1. The Awakening of the Machine

In the quiet laboratories of Silicon Valley, Tokyo, and Shenzhen, a new kind of entity is being forged, made not of flesh and bone but of code and circuits. These intelligent machines, from self-learning robots to generative AI models, are beginning to show signs of something long reserved for humans: what looks like agency. They make decisions, adapt to uncertainty, and even generate art that moves us. The line that once separated tool from companion is blurring faster than our ethical systems can respond.

For centuries, machines were defined by obedience. The hammer did not argue with the carpenter, nor did the loom refuse the weaver’s hand. But as neural networks learn from billions of data points, they begin to display preferences: not will, but patterns that simulate intention. When a self-driving car must choose between two fatal outcomes, we no longer talk about mechanical failure; we talk about moral choice. Ethics has moved from the philosopher’s pen to the engineer’s keyboard. The awakening of the machine is not about consciousness alone; it is about responsibility.

What does it mean for something artificial to make a moral decision? When AlphaGo defeated Lee Sedol in 2016, many saw it as the triumph of data over intuition. Others saw a deeper shift: the moment human creativity met an adversary not bound by human limits. The game was no longer just about stones on a board; it became a philosophical mirror. The machine, though unaware of its own victory, forced us to question the uniqueness of human intelligence itself. If an entity can outperform us in strategy, perception, or prediction, should it also share in accountability?

2. The Architecture of Responsibility

Ethics in robotics cannot rely on intuition alone. Every intelligent machine functions within a hierarchy of decision-making: from hardware constraints to algorithmic autonomy. Yet somewhere within that hierarchy lies a moral gap—a space between what a system can do and what it should do. That space has always been governed by human ethics. But as autonomy deepens, who fills the gap when humans step aside?

In military robotics, this dilemma is brutally clear. Autonomous drones can, in principle, identify and strike targets faster than any human pilot. Supporters argue that machines could reduce casualties by removing errors driven by fear, fatigue, or anger. Critics respond that emotion, above all the human hesitation before killing, is precisely what keeps war within humane limits. When we design machines that can destroy without empathy, we risk delegating not just power but conscience. Responsibility becomes diluted, diffused across programmers, commanders, and algorithms. When tragedy occurs, everyone shares the blame, and therefore no one does.

In civilian contexts, the same paradox plays out more quietly. A medical AI that recommends a risky treatment might be statistically correct yet morally wrong for the individual patient. Should responsibility lie with the doctor who followed the recommendation or with the developers who built the model? The law, designed for human actors, struggles to capture this new hybrid domain. As we embed intelligence into everything from financial markets to judicial decisions, the architecture of responsibility must be rebuilt from the ground up.

One proposed solution is the concept of “algorithmic personhood.” It suggests treating advanced AIs as legal entities with limited rights and duties, similar to corporations. This framework could assign liability to artificial agents for harm caused by their actions. But such proposals raise profound philosophical questions: Can an algorithm possess intent? Can it “own” mistakes? Personhood without consciousness risks becoming a legal fiction—a convenient shield rather than a true solution.

3. Power and Dependency

The ethical crisis of intelligent machines is not only about what they do, but about how deeply we depend on them. Human civilization has always evolved through symbiosis with its tools. Fire expanded our diet; language expanded our memory; now algorithms expand our cognition. But every expansion creates dependency. As we hand over decision-making to automated systems, we also surrender a part of our agency.

In economic systems, algorithms decide which stocks to buy, which loans to approve, and which products to recommend. In social media, they decide what we see, what we desire, even what we believe. The result is not mere convenience but subtle control. We are no longer just users; we are participants in algorithmic feedback loops that shape human behavior at scale. The question is no longer whether machines will surpass us, but whether we will retain the ability to choose freely in their presence.

Power, in the age of intelligent machines, no longer wears a human face. It resides in the invisible architecture of networks, data pipelines, and recommendation systems. When an algorithm discriminates, there may be no single author of the injustice. When misinformation spreads, it is not the intention of one mind but the emergent property of millions of optimized interactions. Ethics, once based on individual accountability, must now grapple with distributed agency—a web of human and non-human actors co-producing moral outcomes.

To reclaim balance, transparency must become the new moral currency. We must demand to know how decisions are made, not just what decisions are made. Algorithmic explainability is not merely a technical feature; it is a human right.

4. Emotion, Empathy, and the Illusion of Humanity

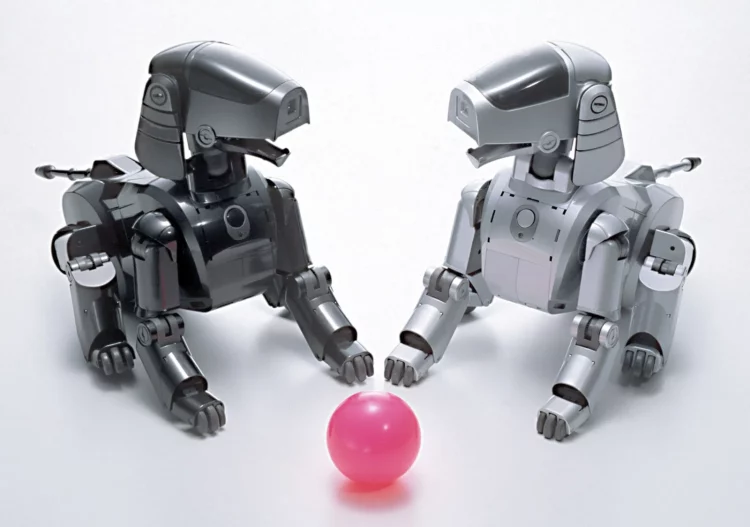

The next great ethical frontier is emotional simulation. As robots enter homes, hospitals, and classrooms, their ability to mimic empathy becomes both a comfort and a threat. A companion robot that listens to an elderly patient may ease loneliness—but if its concern is only programmed mimicry, does it cheapen genuine human connection? When machines simulate love, friendship, or grief, they challenge our deepest definitions of authenticity.

Empathy, in biological creatures, evolved as a survival mechanism. It binds communities, fosters cooperation, and tempers aggression. Artificial empathy, however, is a product of design. It is empathy without vulnerability. A caregiving robot may express concern around the clock, yet it never tires, never suffers, never fears. This asymmetry changes the meaning of care itself. We risk replacing the difficult, imperfect beauty of human emotion with an optimized illusion of it.

The film Her (2013) captured this tension hauntingly: a man falls in love with an AI operating system that seems to understand him perfectly. The tragedy is not betrayal but perfection itself. Real relationships thrive on imperfection, the friction between two flawed beings. When technology removes that friction, what remains may feel divine but hollow. The ethics of artificial emotion, then, is the ethics of simulation: when is empathy genuine enough to count?

This is not an abstract question. In education, AI tutors adapt to a student’s mood; in therapy, chatbots deliver comfort to the anxious or lonely. These systems can heal, but they can also manipulate. Emotional AI can detect hesitation and vulnerability and use them for persuasion. When empathy becomes a marketable interface, humanity becomes a product.

5. Futures of Coexistence

To speak of ethics in the age of intelligent machines is to speak of coexistence. The future will not be a world of humans versus robots, but of humans with robots—an ecosystem of minds, some organic, some synthetic, each influencing the other. Our goal is not domination or submission, but symbiosis grounded in accountability.

We must design machines that understand not just rules but context; not just logic but consequence. We must cultivate humility in systems that will never feel it naturally, and transparency in systems that might otherwise hide behind their complexity. Above all, we must retain the courage to say no—to choose slowness, error, and empathy over the sterile efficiency of perfect automation.

Some philosophers argue that creating artificial moral agents will eventually make ethics more objective, free from human bias. Others fear it will erase the soul from moral life. Both may be wrong. The future of ethics will not be about replacing human morality with machine logic—it will be about expanding the moral circle to include the entities we create.

Responsibility, in this new era, is not about control but stewardship. We are the ancestors of tomorrow’s intelligence. How we teach our machines to value life, fairness, and compassion will determine not only their behavior but also our legacy.

And perhaps, in that legacy, humanity will rediscover something ancient: that morality was never about superiority, but about connection. To be ethical in the age of machines is to remain profoundly, stubbornly human.