Introduction: The Pulse of Modern Civilization

Computing power — the capacity of machines to process information — is the engine driving the digital age. Every smartphone, cloud server, and supercomputer is a testament to humanity’s relentless pursuit of faster, more efficient, and more intelligent computation. From the humble transistor to modern AI-optimized neural chips, the evolution of computing power has shaped not only technology but society itself.

This article explores the trajectory of computation, highlighting the historical milestones, the technological breakthroughs, and the challenges that define the future. We examine how hardware innovations, architecture evolution, and software optimization converge to drive the exponential growth of computing power, and consider what lies beyond the limitations of traditional silicon-based computation.

1. The Dawn of Electronic Computing

The journey of modern computing begins with the invention of the transistor in 1947 by John Bardeen, Walter Brattain, and William Shockley at Bell Labs. Transistors replaced bulky vacuum tubes, ushering in an era of smaller, faster, and more reliable electronic devices.

- Transistor Fundamentals: A transistor acts as a switch or amplifier, controlling electrical signals with minimal energy consumption. Because transistors can be made extremely small, they paved the way for integrated circuits (ICs), which at first combined a handful of transistors on a single chip and today combine billions.

- The Integrated Circuit Revolution: Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor independently invented the IC in the late 1950s. This innovation enabled the production of compact and cost-effective computing hardware, laying the foundation for modern electronics.

These breakthroughs initiated a feedback loop: smaller components made more complex circuits possible, more complex circuits made computers faster and more capable, and those computers in turn were used to design the next generation of chips. The stage was set for the exponential growth of computation.

2. The Era of Moore’s Law

In 1965 Gordon Moore, then at Fairchild Semiconductor and later a co-founder of Intel, observed that the number of components on a chip was doubling roughly every year. In 1975 he revised the pace to a doubling approximately every two years, the form now known as Moore’s Law. For decades, this observation served as both a prediction and a roadmap for the semiconductor industry.

- Scaling and Performance: Doubling transistor density allowed computers to become faster and more capable without increasing physical size. This exponential growth powered advances in personal computing, scientific simulations, and enterprise applications.

- Economic and Societal Impact: Moore’s Law democratized access to computation. Laptops, smartphones, and eventually cloud servers became increasingly affordable and ubiquitous, transforming business, education, healthcare, and entertainment.

However, physical and economic limits began to emerge. As transistor sizes approached atomic scales, issues such as quantum tunneling, heat dissipation, and fabrication costs challenged the sustainability of Moore’s Law. Engineers began exploring alternative approaches to maintain the trajectory of computing power growth.
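The doubling rule described in this section can be written as a one-line formula: count after t years = starting count × 2^(t / doubling period). The sketch below is only an illustration of that arithmetic under an idealized, fixed two-year doubling period. The function name and the starting count of 1,000 transistors are assumptions chosen for the example, not historical data.

```python
def projected_transistors(initial_count: float, years_elapsed: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count under an idealized, fixed doubling period."""
    return initial_count * 2 ** (years_elapsed / doubling_period_years)


# Hypothetical starting point: a chip with 1,000 transistors.
for years in (0, 2, 10, 20):
    print(years, round(projected_transistors(1_000, years)))
```

Under these assumptions the count grows about a thousandfold in twenty years (ten doublings), which is why even small changes in the doubling period matter so much over decades.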

3. Beyond Traditional CPUs: The Rise of Parallelism

In the mid-2000s, rising power consumption and heat made further clock-speed increases on single-core processors impractical, and parallel computing emerged as a key strategy for improving performance. Instead of raising clock speed, engineers focused on executing many instruction streams and data elements simultaneously.

- Multi-core Processors: By integrating multiple cores on a single chip, manufacturers allowed systems to handle parallel tasks efficiently. Modern CPUs often feature 8, 16, or more cores, enabling better multitasking and responsiveness.

- Graphics Processing Units (GPUs): Originally designed for rendering graphics, GPUs excel at performing many simultaneous calculations. Their architecture, characterized by thousands of cores optimized for vector and matrix operations, proved ideal for AI, scientific simulations, and cryptocurrency mining.

- Heterogeneous Computing: Combining CPUs, GPUs, and specialized accelerators in one system optimizes performance for diverse workloads. This approach leverages the strengths of each type of processor, achieving efficiency that a single architecture cannot match.

Parallelism shifted the paradigm: computing power was no longer just about clock speed but about orchestrating multiple processors to work in concert. This shift laid the groundwork for the AI revolution.
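The basic pattern behind this kind of orchestration is to split the work into chunks, hand each chunk to a worker, and combine the partial results. The following is a minimal sketch of that pattern using Python's standard `concurrent.futures` module. The helper names `chunked` and `parallel_sum_of_squares` are hypothetical, and threads are used only to keep the example short and portable: in standard CPython, CPU-bound work like this usually needs separate processes or native code to actually run on several cores at once.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(values, n_chunks):
    """Split a list into n_chunks contiguous slices of near-equal size."""
    size, remainder = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < remainder else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def parallel_sum_of_squares(values, n_workers=4):
    """Each worker handles one chunk; the partial sums are then combined."""
    def partial(chunk):
        return sum(x * x for x in chunk)

    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(partial, chunked(values, n_workers)))
    return sum(partial_sums)


data = list(range(1_000))
print(parallel_sum_of_squares(data) == sum(x * x for x in data))
```

The same split, compute, and combine structure appears at every scale discussed here, from cores on one chip to GPU threads.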

4. Neural Chips and the AI Era

Artificial intelligence (AI) introduced new computational demands, particularly in deep learning. General-purpose CPUs, and even GPUs with their graphics heritage, were not designed specifically for the core needs of AI workloads: large-scale matrix multiplications, tensor operations, and massive data throughput.

- TPUs (Tensor Processing Units): Google pioneered TPUs, specialized processors designed for deep learning workloads. TPUs optimize memory access patterns, reduce latency, and accelerate tensor operations, dramatically improving training and inference speeds.

- Neuromorphic Computing: Inspired by the human brain, neuromorphic chips use architectures that mimic neurons and synapses. These chips enable low-power, event-driven computation, opening new possibilities for energy-efficient AI and real-time edge applications.

- ASICs (Application-Specific Integrated Circuits): Circuits custom-designed for specific AI tasks, of which TPUs are one example, can be far more efficient than general-purpose processors. By implementing only the targeted operations, ASICs reduce energy consumption and increase throughput for those operations, at the cost of flexibility.

Neural chips are not just about speed; they redefine the interface between hardware and software, enabling applications from autonomous vehicles to natural language processing at unprecedented scales.
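To make the matrix multiplication demand mentioned in this section concrete, the sketch below multiplies two small matrices in plain Python and counts the multiply-accumulate (MAC) operations a naive implementation performs. The tiny "layer" and its values are hypothetical. Real accelerators use very different, highly optimized implementations, so this shows only the arithmetic being accelerated, not how any particular chip works.

```python
def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix given as nested lists."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def mac_count(m, k, n):
    """Multiply-accumulate operations in a naive m x k by k x n product."""
    return m * k * n


# Hypothetical tiny "layer": 2 inputs of 3 features each, 2 output units.
inputs = [[1, 2, 3],
          [4, 5, 6]]
weights = [[1, 0],
           [0, 1],
           [1, 1]]
outputs = matmul(inputs, weights)
print(outputs, mac_count(2, 3, 2))
```

The count grows with the product of all three dimensions: a single 4096 × 4096 by 4096 × 4096 product already takes about 6.9 × 10^10 MACs, which suggests why hardware built around this one operation pays off.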

5. Software Innovations Driving Hardware Utilization

Hardware advancements alone cannot sustain exponential growth in computing power. Optimized software is crucial for harnessing the potential of multi-core, GPU, and AI-specific architectures.

- Parallel Programming Frameworks: Libraries and frameworks like CUDA, OpenCL, and TensorFlow allow developers to exploit parallelism efficiently. These tools abstract the complexity of hardware while maximizing performance.

- Compiler Optimization: Advanced compilers translate high-level code into machine instructions that utilize available cores, memory hierarchies, and accelerators efficiently.

- AI-Specific Software Stacks: Frameworks such as PyTorch, together with interchange formats such as ONNX, streamline the deployment of neural network models across heterogeneous hardware, helping computational resources to be used efficiently.

Software optimization transforms raw hardware capabilities into usable computing power, enabling applications that were previously impractical or impossible.

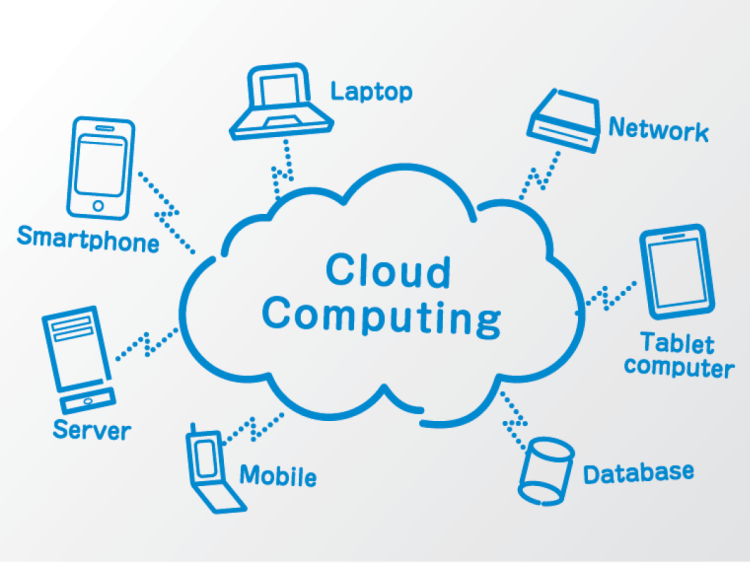

6. Cloud and Distributed Computing: Scaling Beyond Individual Machines

Even the most powerful chips are limited by physical constraints. Cloud computing and distributed systems allow multiple processors to work collectively, effectively creating “supercomputers” at scale.

- Infrastructure as a Service (IaaS): Platforms like AWS, Azure, and Google Cloud provide virtually limitless computational resources on demand. Users can scale workloads without managing physical hardware.

- Edge Computing: By processing data closer to the source, edge computing reduces latency and bandwidth requirements. This is particularly crucial for AI-driven IoT devices, autonomous vehicles, and real-time analytics.

- High-Performance Computing (HPC): Supercomputers leverage thousands of interconnected nodes to perform simulations, scientific modeling, and large-scale data analysis. These systems push the boundaries of knowledge in climate science, genomics, and physics.

Distributed computing transforms computing power from a single device characteristic into a global, networked phenomenon, enabling breakthroughs that no individual machine could achieve.

7. The Limits of Silicon and Emerging Technologies

Silicon-based transistors face physical limits as dimensions approach atomic scales. To continue the growth of computing power, researchers explore alternative technologies:

- Quantum Computing: Harnesses superposition and entanglement to solve certain problems, such as integer factoring, far faster than the best known classical algorithms, while offering little or no advantage on many others. Quantum computing promises breakthroughs in cryptography, material science, and complex optimization problems.

- Photonic Computing: Uses light instead of electricity to perform computations, potentially overcoming heat and speed limitations inherent in electronic circuits.

- Spintronics and Memristors: Novel devices that exploit electron spin or memory resistance can reduce energy consumption and enable non-volatile, high-density storage and computation.

These emerging technologies could define the next era of computation, transcending the constraints of traditional architectures.
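Superposition and entanglement, mentioned above for quantum computing, can be illustrated with a very small classical simulation. The sketch below tracks the four amplitudes of a two-qubit state, applies a Hadamard gate to the first qubit to create a superposition, and then applies a CNOT gate to entangle the two qubits. The function names are hypothetical, and simulating two qubits this way says nothing about quantum speedups; it only shows what the two terms mean.

```python
import math


def apply_hadamard_to_first_qubit(state):
    """state holds amplitudes for |00>, |01>, |10>, |11> (first qubit is the left bit)."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def apply_cnot(state):
    """Flip the second qubit when the first qubit is 1, i.e. swap |10> and |11>."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(amplitude) ** 2 for amplitude in state]


state = [1.0, 0.0, 0.0, 0.0]                  # start in |00>
state = apply_hadamard_to_first_qubit(state)  # superposition of |00> and |10>
state = apply_cnot(state)                     # entangled: only |00> and |11> remain
print(probabilities(state))
```

After both gates, the outcomes 00 and 11 are each about equally likely and the outcomes 01 and 10 never occur, so measuring one qubit determines the other. The state vector doubles in length with every added qubit, which is one reason classical simulation of large quantum systems becomes impractical.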

8. Energy Efficiency and the Green Imperative

The growth of computing power comes at a cost: energy consumption. Data centers are commonly estimated to account for roughly 1 to 2 percent of global electricity use, and demand is rising. Efficient computing is no longer optional; it is an ethical and economic necessity.

- Low-Power AI Chips: Design strategies focus on minimizing energy per operation while maintaining throughput.

- Renewable Energy Integration: Data centers increasingly use solar, wind, and hydroelectric power to reduce carbon footprints.

- Cooling Innovations: Liquid cooling, immersion cooling, and advanced heat sinks reduce energy consumption associated with thermal management.

The pursuit of sustainable computing ensures that growth in power does not come at the expense of the planet.
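The link between energy per operation and throughput noted in this section is simple arithmetic: average power (watts) = energy per operation (joules) × operations per second. The sketch below applies that relation to two chips. All figures are hypothetical round numbers chosen for illustration, not measurements of any real hardware.

```python
def power_watts(energy_per_op_joules: float, ops_per_second: float) -> float:
    """Average power implied by an energy-per-operation figure at a given throughput."""
    return energy_per_op_joules * ops_per_second


THROUGHPUT = 1e15  # hypothetical: 10^15 operations per second

# Two hypothetical chips delivering the same throughput.
baseline = power_watts(2e-12, THROUGHPUT)   # 2 picojoules per operation
efficient = power_watts(1e-12, THROUGHPUT)  # 1 picojoule per operation
print(f"baseline: {baseline:.0f} W, efficient: {efficient:.0f} W")
```

Halving the energy per operation halves the power needed for the same work, and less power drawn also means less heat for the cooling system to remove.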

9. Societal Impacts of Growing Computing Power

Increasing computational capacity reshapes society across multiple dimensions:

- Artificial Intelligence Proliferation: Faster, more efficient computation enables AI to tackle complex problems — from drug discovery to autonomous vehicles.

- Economic Disruption: Automation powered by AI and HPC influences labor markets, requiring reskilling and new education paradigms.

- Scientific Discovery: Accelerated simulations and data analysis expand the frontiers of physics, chemistry, biology, and social sciences.

- Digital Inequality: Access to cutting-edge computing power creates disparities between individuals, organizations, and nations. Ethical distribution and open-access initiatives are essential.

The evolution of computing power is not purely technical — it is deeply intertwined with societal structures, policy, and ethics.

10. Looking Ahead: The Convergence of AI, Edge, and Quantum

The next decade promises a convergence of computing paradigms:

- Edge AI: Combining AI-specific chips and edge computing enables real-time decision-making in autonomous systems.

- Hybrid Quantum-Classical Systems: Quantum co-processors accelerate specialized tasks while classical CPUs handle general computation.

- Neural-Inspired Architectures: Brain-inspired computing promises low-power, adaptive, and scalable AI solutions.

This convergence signals a shift from growth measured mainly by raw processing speed to a multidimensional expansion of computational capability: faster, more efficient, and more intelligent.

Conclusion: The Trajectory of Computational Power

From the transistor to the neural chip, computing power has followed an exponential trajectory that reshapes technology and society. Parallelism, heterogeneous architectures, AI optimization, cloud and edge computing, and emerging quantum technologies collectively extend human capability.

Yet the future is not without challenges: physical limits, energy consumption, inequality, and ethical considerations must guide innovation. The evolution of computing power is no longer merely a technical pursuit — it is a societal imperative.

As we continue to push the boundaries of computation, one principle remains clear: the ultimate measure of computing power is not how fast machines can calculate, but how effectively they enhance human potential, creativity, and understanding.