Abstract

As artificial intelligence, high-performance computing, and real-time digital services expand across every sector of society, computation itself is transforming into a global utility. The emergence of Global Compute Networks (GCNs)—a distributed mesh of interconnected data centers, edge nodes, cloud fabrics, and specialized accelerators—marks a profound shift in how humanity organizes and consumes computing power. This paper explores the architecture of such networks: their conceptual foundations, technological components, coordination mechanisms, and the economic and political dimensions that underpin them. It also examines major challenges—including latency, security, energy efficiency, and governance—and concludes with a forward-looking perspective on how GCNs might evolve into a planetary-scale digital infrastructure that parallels the importance of the electrical grid.

1. Introduction

For most of the digital era, computing has been localized. Organizations owned servers; users interacted with machines housed in physical facilities. The last two decades, however, have seen the emergence of global cloud platforms such as Amazon Web Services, Microsoft Azure, Google Cloud, and Alibaba Cloud, which offer elastic resources accessible from anywhere. Simultaneously, the rise of artificial intelligence (AI), real-time analytics, metaverse applications, and autonomous systems has driven unprecedented demand for compute power. In response, the world is witnessing the gradual formation of Global Compute Networks: a distributed infrastructure that integrates cloud data centers, edge nodes, quantum facilities, and specialized accelerators into a unified, intelligent fabric.

Unlike the Internet, which primarily interconnects information, a Global Compute Network interconnects computation. It allows workloads to be dynamically deployed, migrated, and optimized across continents. Such networks will not merely transmit data; they will decide where and how to process it based on energy availability, latency requirements, and data sovereignty. GCNs therefore represent a foundational shift: compute becomes a utility rather than a localized asset, similar to how electricity became universal during the industrial age.

2. Conceptual Foundations of Global Compute Networks

2.1 From Cloud to Compute Fabric

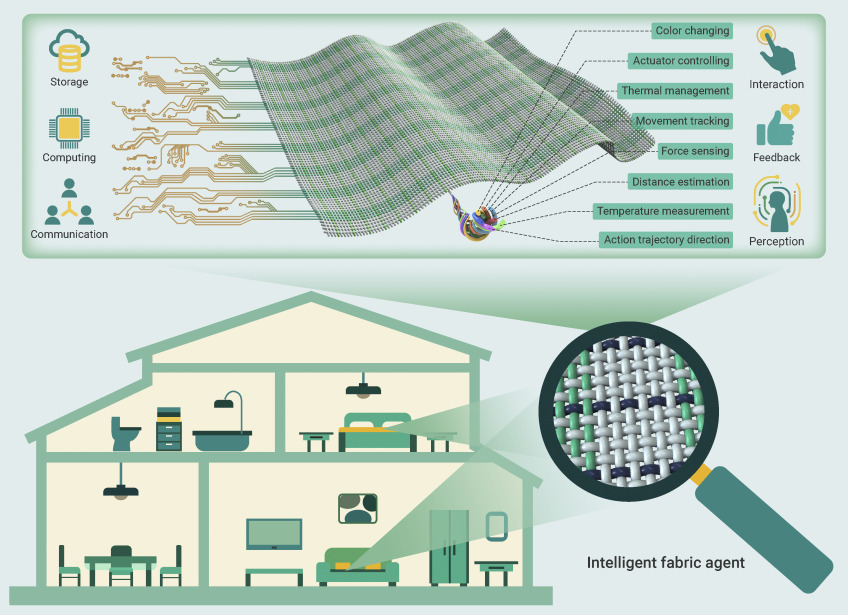

Traditional cloud computing centralizes resources in hyperscale data centers. This model provides economies of scale but introduces latency and energy inefficiencies for distributed applications. Global Compute Networks instead operate as a compute fabric—a fluid continuum that spans the cloud core, intermediate regional hubs, and edge devices embedded in cities, vehicles, and factories. Workloads flow through this fabric, adapting to context. For instance, a self-driving car may process sensor data locally for millisecond decisions but rely on cloud inference for complex model updates.

2.2 The Compute Layer Stack

A GCN can be conceptually described through multiple layers:

- Physical Layer: Data centers, edge servers, networking links, satellites, and undersea cables.

- Virtualization Layer: Containers, hypervisors, and resource abstraction technologies that enable workload mobility.

- Coordination Layer: Software-defined networking (SDN), orchestration tools like Kubernetes, and distributed schedulers managing task allocation across the network.

- Intelligence Layer: AI-driven optimization systems that predict workload demand, energy pricing, and hardware performance to route compute tasks dynamically.

- Application Layer: Services such as AI model training, scientific simulation, video rendering, and blockchain validation.

This layered approach mirrors the OSI model of networking, but it focuses on computation as the fundamental resource.

2.3 Compute as a Global Utility

The vision of “compute as the new electricity” implies universal accessibility, dynamic pricing, and standardized interfaces. Just as the electrical grid balances load across regions, a GCN could distribute computational load according to real-time demand and energy costs. Idle GPUs in one region might be automatically allocated to users on another continent. Achieving this vision requires interoperability standards akin to TCP/IP for data—perhaps an open protocol suite for global compute orchestration.
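The grid analogy can be made concrete with a minimal sketch of merit-order dispatch, the rule power markets use to fill demand from the cheapest available supply first. Everything here is an illustrative assumption rather than part of any existing protocol: the `Region` fields, the region names, and the prices are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    free_gpus: int              # idle accelerators currently available
    price_per_gpu_hour: float   # hypothetical spot price driven by local energy cost


def dispatch(demand_gpus: int, regions: list[Region]) -> dict[str, int]:
    """Fill demand from the cheapest regions first (merit-order dispatch)."""
    allocation: dict[str, int] = {}
    remaining = demand_gpus
    for region in sorted(regions, key=lambda r: r.price_per_gpu_hour):
        if remaining == 0:
            break
        take = min(region.free_gpus, remaining)
        if take > 0:
            allocation[region.name] = take
            remaining -= take
    if remaining > 0:
        # Demand that no region could serve at the moment.
        allocation["unmet"] = remaining
    return allocation


# Hypothetical regions and prices, for illustration only.
regions = [
    Region("nordics-hydro", free_gpus=40, price_per_gpu_hour=1.10),
    Region("us-west", free_gpus=100, price_per_gpu_hour=1.80),
    Region("apac-south", free_gpus=25, price_per_gpu_hour=1.45),
]
plan = dispatch(90, regions)
print(plan)
```

With these numbers, the request for 90 GPUs is served first from the cheapest region, then the next cheapest, and only the remainder reaches the most expensive one. A real network would also have to account for data transfer costs and latency, which this sketch ignores.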

3. Core Components and Technologies

3.1 Data Centers and the Cloud Core

At the heart of every GCN lies a dense network of hyperscale data centers. These facilities host millions of CPUs, GPUs, TPUs, and emerging AI accelerators. To connect globally, they rely on high-capacity fiber and optical backbones. Innovations such as liquid cooling, AI-based power optimization, and renewable energy integration are central to ensuring sustainability. The trend toward modular and containerized micro-data centers also enables rapid deployment in developing regions, democratizing compute access.

3.2 The Edge Layer

The “edge” refers to computation performed near data sources—industrial sensors, smartphones, drones, or 5G base stations. In a GCN, the edge layer acts as the first hop of computation, reducing latency and bandwidth consumption. Edge nodes can perform pre-processing, inference, or caching, while cloud resources handle model training and large-scale analytics. The convergence of 5G/6G connectivity, AI chips, and low-power hardware makes this possible.

3.3 Networking and Interconnects

High-speed interconnects are the arteries of the GCN. Technologies like optical switching, software-defined wide area networks (SD-WAN), and network function virtualization (NFV) provide flexible routing. Future networks may employ quantum key distribution (QKD) for secure transmission and terabit-class optical channels to support AI-scale workloads. Additionally, satellite constellations (e.g., Starlink, OneWeb) are extending global reach, ensuring even remote regions can participate in the compute economy.

3.4 Orchestration and Scheduling

At the logical core of a GCN lies its orchestration engine. Frameworks such as Kubernetes (in federated or multi-cluster configurations) and Ray, along with proprietary orchestration systems built in-house by large AI developers, already place workloads across clusters and, in principle, across geographies. Advanced scheduling algorithms consider factors such as:

- Latency to end-users

- Real-time energy pricing

- Carbon footprint

- Hardware availability and health

- Data locality and compliance laws

This “intelligent scheduler” acts like an air traffic controller for computation—continuously matching tasks with optimal resources.

3.5 Energy and Cooling Infrastructure

Computation consumes energy. By common estimates, data centers today account for roughly 1 to 2% of world electricity use. In a GCN, efficiency becomes paramount. Emerging strategies include:

- Using AI models to predict and minimize energy peaks.

- Liquid immersion cooling to increase density and reduce waste heat.

- Locating compute clusters near renewable energy sources such as hydroelectric or geothermal plants.

Some researchers envision mobile compute pods that physically migrate toward low-cost, low-carbon energy regions—literally chasing the sun.

4. Software Foundations

4.1 Virtualization and Containerization

Virtualization allows workloads to be decoupled from hardware. Container platforms like Docker and orchestration frameworks like Kubernetes enable portability and scalability across the global fabric. In a GCN, container migration must be nearly instantaneous, requiring innovations in live state transfer and distributed storage synchronization.

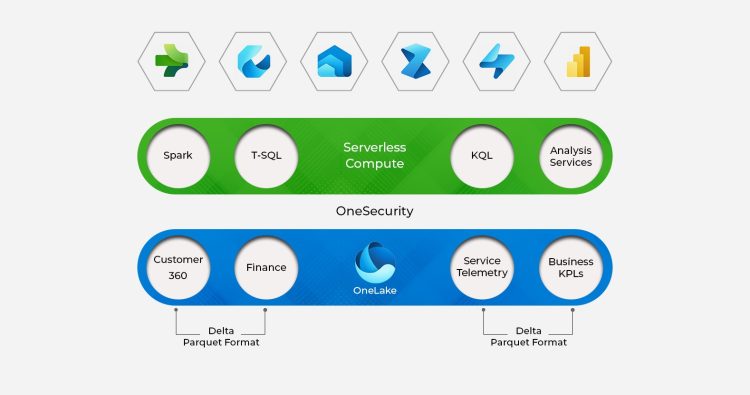

4.2 Distributed File Systems and Data Fabrics

Data is the oxygen of computation. Systems such as Ceph, HDFS, and modern data fabrics like Alluxio provide global data visibility. However, cross-border data replication raises privacy and compliance issues—especially under regulations like GDPR and China’s Data Security Law. Future architectures will likely employ federated data governance, allowing compute mobility without breaching sovereignty.

4.3 AI-Driven Resource Management

AI itself is becoming the operating system of the GCN. Reinforcement learning agents can predict workload surges, identify hardware faults, and optimize power usage dynamically. For example, Google has reported that a DeepMind machine learning system reduced the energy used for cooling its data centers by up to 40%. Extrapolated globally, such systems could make the GCN self-optimizing and largely autonomous.

5. Challenges and Bottlenecks

5.1 Latency and Bandwidth

Despite advances in networking, physical distance still imposes latency limits: even in a vacuum, light takes about 134 milliseconds to circle the Earth (about 67 milliseconds to reach the far side of the planet), and signals in optical fiber travel roughly a third slower. Real-time applications like augmented reality or tele-robotics often require responses on the order of 10 milliseconds or less. Hybrid architectures combining local edge inference with cloud-scale coordination are thus essential.
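A back-of-the-envelope calculation shows why distance matters. The sketch below assumes an equatorial circumference of 40,075 km, a vacuum light speed of 299,792 km/s, and a fiber refractive index of about 1.47 (a typical value, assumed here); it ignores routing detours, switching, and processing time, so real latencies are higher.

```python
EARTH_CIRCUMFERENCE_KM = 40_075   # equatorial circumference
C_VACUUM_KM_PER_S = 299_792       # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.47     # assumed typical value for silica fiber


def signal_speed_km_per_s(in_fiber: bool = True) -> float:
    """Propagation speed in fiber (c / n) or in vacuum (c)."""
    return C_VACUUM_KM_PER_S / FIBER_REFRACTIVE_INDEX if in_fiber else C_VACUUM_KM_PER_S


def one_way_delay_ms(distance_km: float, in_fiber: bool = True) -> float:
    """Pure propagation delay over a path of the given length."""
    return distance_km / signal_speed_km_per_s(in_fiber) * 1000


def max_distance_km(rtt_budget_ms: float, in_fiber: bool = True) -> float:
    """Farthest a server can be if propagation alone uses the whole round-trip budget."""
    return signal_speed_km_per_s(in_fiber) * (rtt_budget_ms / 1000) / 2


around_vacuum = one_way_delay_ms(EARTH_CIRCUMFERENCE_KM, in_fiber=False)
around_fiber = one_way_delay_ms(EARTH_CIRCUMFERENCE_KM, in_fiber=True)
reach_10ms = max_distance_km(10)

print(f"Around the Earth, vacuum: {around_vacuum:.0f} ms")
print(f"Around the Earth, fiber:  {around_fiber:.0f} ms")
print(f"Max one-way distance for a 10 ms round trip in fiber: {reach_10ms:.0f} km")
```

Under these assumptions a full circuit takes about 134 ms in vacuum and just under 200 ms in fiber, while a 10 ms round-trip budget limits the server to roughly 1,000 km away even before any processing is counted. That bound is the quantitative case for edge computing.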

5.2 Security and Privacy

A global compute mesh dramatically enlarges the attack surface. Threats include side-channel attacks, malicious orchestration commands, and compromised edge devices. Techniques such as trusted execution environments (TEE), zero-trust security frameworks, and confidential computing can help mitigate risks. Encryption alone is insufficient; trust must be distributed.

5.3 Interoperability and Standards

Currently, compute services are largely siloed: AWS workloads cannot seamlessly migrate to Azure or Huawei Cloud. The lack of open standards hinders the formation of a true GCN. Initiatives like GAIA-X (federated data and cloud services in Europe) and the Open Compute Project (open hardware designs) are early attempts to define interoperable frameworks. The future may demand a “Compute Interconnection Protocol” (CIP), enabling transparent workload mobility just as TCP/IP enabled data exchange.

5.4 Energy Sustainability

Compute expansion risks colliding with planetary energy constraints. Training a single large AI model can already consume gigawatt-hours of electricity. Balancing performance and sustainability will require renewable integration, circular hardware economies, and innovations such as photonic computing or neuromorphic chips, which promise to substantially reduce energy per operation.

5.5 Governance and Policy

Global compute inevitably intersects with geopolitics. Who owns the compute nodes that train the world’s largest AI models? How are cross-border data flows regulated? National sovereignty, export controls, and digital taxation all complicate the picture. Without international governance frameworks, GCNs risk fragmenting into regional “compute blocs,” echoing Cold War divisions.

6. Opportunities and Emerging Directions

6.1 Compute Marketplaces

A mature GCN could enable compute marketplaces where individuals and organizations trade spare resources. Decentralized networks already experiment with this model: Golem rents out spare compute and Filecoin spare storage, with payments settled in blockchain tokens (Golem's token is issued on Ethereum). In the future, compute might become tokenized, allowing microtransactions for milliseconds of GPU time and creating a truly global compute economy.
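The core mechanism of such a marketplace can be sketched as a simple double auction: buyers post bids, providers post asks, and orders are matched while the best bid meets or exceeds the best ask. This is a generic illustration and does not describe how any named protocol works; the `Order` type, the trader names, the midpoint pricing rule, and the price unit are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Order:
    trader: str
    gpu_seconds: int
    price: float   # per GPU-second, in an arbitrary token unit


def match_orders(bids: list[Order], asks: list[Order]) -> list[tuple[str, str, int, float]]:
    """Match highest bids with lowest asks; each trade clears at the midpoint price."""
    bids = sorted(bids, key=lambda o: o.price, reverse=True)
    asks = sorted(asks, key=lambda o: o.price)
    bid_left = [b.gpu_seconds for b in bids]   # unfilled quantity per bid
    ask_left = [a.gpu_seconds for a in asks]   # unsold quantity per ask
    trades = []
    i = j = 0
    while i < len(bids) and j < len(asks) and bids[i].price >= asks[j].price:
        qty = min(bid_left[i], ask_left[j])
        price = (bids[i].price + asks[j].price) / 2
        trades.append((bids[i].trader, asks[j].trader, qty, price))
        bid_left[i] -= qty
        ask_left[j] -= qty
        if bid_left[i] == 0:
            i += 1
        if ask_left[j] == 0:
            j += 1
    return trades


# Illustrative orders only.
bids = [Order("lab-a", 100, 40), Order("startup-b", 50, 25)]
asks = [Order("idle-farm", 80, 20), Order("gamer-rig", 100, 30)]
trades = match_orders(bids, asks)
for buyer, seller, qty, price in trades:
    print(f"{buyer} buys {qty} GPU-seconds from {seller} at {price}")
```

In this example the highest bidder is filled from the two cheapest providers, while the lower bid goes unmatched because it falls below the remaining ask. A real marketplace would also need to verify that the purchased computation was actually performed, which is the harder problem.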

6.2 AI-Native Compute Fabrics

As AI models increasingly dominate workloads, hardware and network architectures are being redesigned around them. Specialized interconnects like NVIDIA NVLink or Cerebras Swarm facilitate low-latency tensor communication. GCNs could evolve into AI-native fabrics that natively support distributed model training, inference sharing, and continual learning across the globe.

6.3 Integration with Quantum and Neuromorphic Systems

The coming decades may see hybrid networks where quantum computers handle specific subproblems (e.g., optimization, cryptography) and classical nodes perform large-scale AI inference. Similarly, neuromorphic chips could deliver event-driven energy efficiency. The GCN will thus become heterogeneous by design, blending multiple computational paradigms.

6.4 Compute and the Planetary Nervous System

Viewed philosophically, a Global Compute Network is the foundation of a planetary nervous system—a distributed intelligence sensing and responding to global conditions. From climate modeling to pandemic forecasting, this infrastructure could coordinate responses in near real time. Yet such power also invites ethical scrutiny: who controls the brain of the planet?

7. Case Studies

7.1 The European GAIA-X Project

GAIA-X aims to build a federated cloud and data infrastructure based on openness, transparency, and sovereignty. Instead of a centralized provider, it coordinates European data centers under common interoperability and security standards. GAIA-X demonstrates how regional cooperation can lay the groundwork for a broader global network.

7.2 NVIDIA DGX Cloud and Compute-as-a-Service

NVIDIA’s DGX Cloud integrates global GPU clusters accessible via subscription. It abstracts away infrastructure, enabling instant scaling for AI training. This approach exemplifies how vendors are moving toward “Compute-as-a-Service” models that unify resources across borders.

7.3 China’s National Integrated Computing Power Network (国家一体化算力网络)

China’s initiative to link data centers nationwide—spanning regions like Guizhou, Inner Mongolia, and the Yangtze Delta—illustrates how national-level GCNs can reduce energy imbalance and boost industrial AI deployment. Similar models may emerge elsewhere as countries pursue digital sovereignty.

8. Ethical and Societal Implications

A planetary-scale compute fabric raises profound questions. If computation becomes as essential as water or electricity, should it be treated as a public good? How can we prevent monopolization of compute by a few tech giants? Ensuring equitable access, transparency, and sustainability will be as important as technical performance.

Furthermore, GCNs concentrate immense informational power. Whoever controls global scheduling algorithms could influence which AI models thrive, what simulations run, and whose data is prioritized. Ethical governance—combining technical safeguards with democratic oversight—will be crucial to ensure that compute serves humanity rather than dominating it.

9. The Road Ahead

The next decade will likely see the gradual materialization of the Global Compute Network through several parallel evolutions:

- Interoperability Standards – Emergence of open protocols for workload mobility.

- AI-Driven Autonomy – Self-managing compute fabrics reducing human intervention.

- Green Compute Integration – Alignment with renewable grids and circular hardware systems.

- Regional Federations – National and continental initiatives merging into a global mesh.

- Quantum and Neuromorphic Fusion – Expansion beyond silicon-based computing.

Just as the Internet’s early architects could not foresee today’s digital economy, the full impact of GCNs may become visible only decades from now. Yet their trajectory is clear: computation is escaping the boundaries of data centers to become an omnipresent, planetary-scale fabric.

10. Conclusion

The architecture of Global Compute Networks represents the next evolutionary step in human technological infrastructure. By interconnecting compute resources worldwide, GCNs promise to deliver near-infinite scalability, efficiency, and resilience. They blur the lines between cloud and edge, public and private, physical and virtual. Their success, however, depends on solving immense challenges: ensuring interoperability, sustainability, and equitable governance.

If achieved, the GCN will be more than a technical network—it will be the digital circulatory system of civilization, powering science, creativity, and collective intelligence across the planet. Just as electricity transformed the 20th century, global compute networks may well define the 21st.