Abstract

The convergence of artificial intelligence (AI) and edge computing is reshaping the architecture of global computation. As the world moves toward distributed intelligence, computation is no longer confined to centralized cloud data centers but flows dynamically through a continuum of edge devices, regional nodes, and global networks. This paper explores how AI and edge computing collaborate within the emerging Global Compute Fabric (GCF)—an interconnected ecosystem that balances latency, efficiency, privacy, and intelligence distribution. It examines key technologies, architectures, challenges, and opportunities underpinning this collaboration, and argues that the fusion of AI and edge computing will define the operational nervous system of the 21st-century digital planet.

1. Introduction

The early Internet era centralized intelligence: data flowed upward to massive servers where processing occurred, and results were transmitted back to users. However, the explosion of smart devices, real-time applications, and autonomous systems has outgrown this model. Billions of sensors now generate continuous streams of data that cannot feasibly be sent to distant data centers for processing due to latency, bandwidth, and privacy constraints.

At the same time, artificial intelligence has become one of the most demanding and fastest-growing classes of computational workload. From language models to industrial automation, AI requires substantial compute power and fast feedback loops. The need to bring intelligence closer to data has made edge computing central to AI deployment. Yet AI at the edge cannot thrive in isolation; it must connect with cloud and supercomputing resources for training, coordination, and global optimization.

The synthesis of these two paradigms, AI and edge computing, forms the backbone of what this paper calls the Global Compute Fabric. This fabric acts as a seamless continuum in which computation flows to where it is needed most: to the edge for immediacy, to the cloud for scale, and across the network for coordination. Understanding this collaboration is key to building resilient, efficient, and intelligent digital infrastructure for the future.

2. The Global Compute Fabric: A New Computational Paradigm

2.1 From Hierarchies to Continuums

Traditional computing architectures followed a hierarchical model: sensors → gateways → cloud. Each layer had defined roles. In the Global Compute Fabric, these layers blur into a continuum. Computation is no longer statically assigned but dynamically orchestrated across a distributed topology based on real-time context. AI models can migrate between edge, fog, and cloud depending on latency, energy, and network conditions.

2.2 The Role of Collaboration

The essence of the GCF lies in collaboration: cloud and edge nodes cooperate rather than compete. The edge handles immediate, localized inference, while the cloud provides heavy computation for training, aggregation, and long-term optimization. The communication between them is continuous and adaptive, forming a cyber-physical ecosystem reminiscent of the brain’s interplay between neurons (edge) and central cognition (cloud).

2.3 Edge Intelligence as a Foundation of the Digital World

Industry forecasts have projected tens of billions of connected devices by the mid-2020s, and IDC estimated that the global datasphere would reach roughly 175 zettabytes per year by 2025. Without distributed intelligence at the edge, data volumes on this scale would overwhelm networks and data centers. The GCF, powered by AI, turns raw data into actionable insight near its source, creating an efficient, privacy-preserving, and responsive computing environment.

3. Core Technologies Enabling AI–Edge Collaboration

3.1 Distributed AI Models

In the GCF, AI models are decomposed and distributed across nodes. Techniques such as federated learning, split learning, and edge model distillation allow training and inference to occur collaboratively without moving raw data. Federated learning, pioneered by Google, trains models locally on devices while aggregating updates globally—preserving privacy while maintaining model quality.
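The aggregation step of federated learning can be illustrated with a minimal sketch of federated averaging (FedAvg), the algorithm introduced by Google researchers. It assumes a toy one-feature linear model, plain SGD, and synthetic data; the function names and hyperparameters are illustrative, and production systems add client sampling, secure aggregation, and compression on top of this loop.

```python
import random

def local_train(weights, data, lr=0.01, epochs=5):
    """Run a few epochs of SGD on one device's private (x, y) pairs."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y      # prediction error for squared loss
            w -= lr * 2 * err * x      # d(err^2)/dw
            b -= lr * 2 * err          # d(err^2)/db
    return w, b

def fed_avg(updates, sizes):
    """Average device models, weighting each by its local dataset size."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return w, b

def make_device_data(n, rng):
    """Synthetic private samples of y = 3x + 1 plus small noise."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in xs]

rng = random.Random(0)
devices = [make_device_data(20 + 10 * k, rng) for k in range(3)]

global_model = (0.0, 0.0)
for _ in range(20):
    # Each device trains locally; only parameters are sent for aggregation.
    updates = [local_train(global_model, d) for d in devices]
    global_model = fed_avg(updates, [len(d) for d in devices])

print(f"w={global_model[0]:.2f}, b={global_model[1]:.2f}")
```

After a few rounds the global parameters approach the generating values (w = 3, b = 1), although the aggregation step never sees a single (x, y) pair.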

Split learning divides a model into segments that execute partly on the edge device and partly in the cloud. Only the intermediate activations at the cut layer (and their gradients during training) cross the network, so raw data stays local while the heavier layers run on cloud hardware. Model distillation, on the other hand, uses a complex “teacher” model trained in the cloud to train a lighter “student” model that can be deployed on edge devices.
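The teacher–student idea is usually implemented as a loss function. Below is a minimal sketch of the soft-target formulation from Hinton et al. (2015): the student matches the teacher’s temperature-softened output distribution while still fitting the true label. The temperature, the weighting `alpha`, and the example logits are assumed values for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)                          # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.7):
    """Weighted sum of a soft-target term (match the teacher) and a hard-label term."""
    soft = kl_divergence(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))
    soft *= temperature ** 2                   # keeps gradient scale comparable across temperatures
    hard = -math.log(softmax(student_logits)[label])   # cross-entropy with the true label
    return alpha * soft + (1 - alpha) * hard

teacher = [5.0, 2.0, 0.5]          # cloud model's logits for one input (made up)
good_student = [4.5, 2.2, 0.4]
poor_student = [0.5, 2.0, 5.0]
print(distillation_loss(good_student, teacher, label=0))
print(distillation_loss(poor_student, teacher, label=0))
```

During training only the student's parameters are updated; the teacher is queried but kept fixed.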

3.2 Edge AI Hardware

Hardware advancements are vital for AI–edge synergy. Specialized accelerators, such as NVIDIA Jetson modules, the Google Coral Edge TPU, Apple’s Neural Engine, Huawei’s Ascend processors, and the Qualcomm AI Engine, enable deep learning inference with minimal power consumption. These designs typically combine dedicated tensor or matrix units, neural accelerators, and on-chip memory to keep inference latency low.

Emerging neuromorphic processors, such as Intel’s Loihi and IBM’s TrueNorth, emulate networks of spiking neurons. Their computation is event-driven, so most energy is spent only when spikes occur, and for some pattern-recognition tasks they can operate in real time using a fraction of the energy of conventional architectures. As these technologies mature, they could underpin the “neural layer” of the global compute fabric.
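Spiking hardware of this kind is commonly described in terms of neuron models from the leaky integrate-and-fire (LIF) family. The sketch below simulates a single LIF neuron in software to show the behaviour: the membrane potential leaks toward rest, integrates input, and emits a spike only on a threshold crossing. The parameter values are illustrative assumptions, not Loihi or TrueNorth specifications, and the chips implement such dynamics in dedicated circuits rather than in Python.

```python
def simulate_lif(input_current, tau=20.0, v_rest=0.0, v_thresh=1.0, v_reset=0.0, dt=1.0):
    """Leaky integrate-and-fire neuron (forward Euler). Returns the spike time steps."""
    v = v_rest
    spikes = []
    for t, i_in in enumerate(input_current):
        # Leak toward the resting potential, then integrate the input.
        v += (dt / tau) * (v_rest - v) + dt * i_in
        if v >= v_thresh:      # a threshold crossing emits a spike...
            spikes.append(t)
            v = v_reset        # ...and resets the membrane potential
    return spikes

weak = simulate_lif([0.04] * 200)     # leak balances the input below threshold
strong = simulate_lif([0.1] * 200)    # input outpaces the leak: periodic spikes
print(len(weak), len(strong))
```

The weak input never produces a spike, which is the property event-driven hardware exploits: with no spikes, there is little downstream work to do.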

3.3 Edge-Orchestrated Networking

For true collaboration, networking itself must become intelligent. Software-defined networking (SDN) and network slicing in 5G/6G environments allow the dynamic allocation of bandwidth and latency profiles to edge AI workloads. A self-driving car, for instance, may reserve ultra-low-latency channels for sensor fusion while streaming less critical analytics over standard connections.
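The car example can be expressed as a slice-selection rule. In this minimal, hypothetical sketch each slice advertises a guaranteed latency and a relative price, and each traffic flow is mapped to the cheapest slice that meets its latency budget. The slice names echo the 5G URLLC and eMBB service categories, but the numbers and the selection policy are assumptions, not values from any standard or operator.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Slice:
    name: str
    max_latency_ms: float   # latency the slice is provisioned to guarantee
    cost_per_mb: float      # relative price (hypothetical)

@dataclass(frozen=True)
class Flow:
    name: str
    latency_budget_ms: float

SLICES = [
    Slice("urllc", max_latency_ms=5, cost_per_mb=10.0),
    Slice("embb", max_latency_ms=50, cost_per_mb=1.0),
    Slice("best-effort", max_latency_ms=200, cost_per_mb=0.2),
]

def assign_slice(flow, slices=SLICES):
    """Cheapest slice whose guaranteed latency fits within the flow's budget."""
    eligible = [s for s in slices if s.max_latency_ms <= flow.latency_budget_ms]
    if not eligible:
        raise ValueError(f"no slice can meet {flow.latency_budget_ms} ms for {flow.name}")
    return min(eligible, key=lambda s: s.cost_per_mb)

for flow in [Flow("sensor-fusion", 10), Flow("hd-map-update", 80), Flow("fleet-analytics", 1000)]:
    print(flow.name, "->", assign_slice(flow).name)
# sensor-fusion -> urllc
# hd-map-update -> embb
# fleet-analytics -> best-effort
```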

Future networks may employ AI-driven routing algorithms that predict congestion, energy usage, and compute availability, dynamically reconfiguring the topology of the GCF in real time.
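A minimal sketch of prediction-driven routing follows. It assumes a simple exponentially weighted moving average (EWMA) as the latency “predictor” (a learned model could replace it) and runs Dijkstra’s algorithm over the predicted link latencies. The topology, node names, and samples are hypothetical.

```python
import heapq

class LinkLatencyPredictor:
    """Predicts each link's next latency as an EWMA of observed samples."""

    def __init__(self, alpha=0.3):
        self.alpha = alpha          # weight of the newest observation
        self.estimate = {}          # (u, v) -> predicted latency in ms

    def observe(self, link, latency_ms):
        if link not in self.estimate:
            self.estimate[link] = latency_ms       # first sample seeds the estimate
        else:
            old = self.estimate[link]
            self.estimate[link] = self.alpha * latency_ms + (1 - self.alpha) * old

def best_path(graph, predictor, src, dst):
    """Dijkstra's algorithm over predicted link latencies; returns (path, cost)."""
    dist = {src: 0.0}
    prev = {}
    heap = [(0.0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist[node]:
            continue                # stale heap entry
        for nbr in graph.get(node, []):
            nd = d + predictor.estimate.get((node, nbr), float("inf"))
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if dst not in dist:
        return None, float("inf")
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1], dist[dst]

graph = {"edge": ["fog", "cloud"], "fog": ["cloud"], "cloud": []}
pred = LinkLatencyPredictor()
for sample in [20, 60, 90]:                 # the direct uplink is getting congested
    pred.observe(("edge", "cloud"), sample)
pred.observe(("edge", "fog"), 5)
pred.observe(("fog", "cloud"), 30)
print(best_path(graph, pred, "edge", "cloud"))   # (['edge', 'fog', 'cloud'], 35.0)
```

Because the EWMA for the direct link has risen to about 49 ms, traffic is steered through the fog node before the congestion becomes severe.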

3.4 TinyML and On-Device Learning

A key enabler of edge AI is Tiny Machine Learning (TinyML)—the optimization of deep learning models to run on microcontrollers with kilobytes of memory. Combined with on-device learning, TinyML allows localized adaptation without cloud dependence. For example, a wearable health sensor can learn individual physiological patterns, providing personalized analytics without transmitting private data.
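Full backpropagation is often too heavy for a microcontroller, but even lightweight on-device adaptation can personalize behaviour. The sketch below is an illustrative assumption rather than any specific TinyML framework: it keeps a wearer’s running heart-rate statistics with Welford’s online algorithm (one pass, constant memory) and flags readings that deviate strongly from that personal baseline. The thresholds and the synthetic data are hypothetical, and nothing leaves the device.

```python
import math
import random

class PersonalBaseline:
    """On-device running statistics (Welford's algorithm) for one wearer."""

    def __init__(self, z_threshold=3.0, warmup=30):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0              # running sum of squared deviations from the mean
        self.z_threshold = z_threshold
        self.warmup = warmup       # readings to collect before flagging anything

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)   # uses the updated mean

    def std(self):
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0

    def check(self, x):
        """Flag x if it is far from this wearer's baseline; learn from normal readings."""
        sd = self.std()
        anomalous = self.n >= self.warmup and sd > 0 and abs(x - self.mean) / sd > self.z_threshold
        if not anomalous:
            self.update(x)         # adapt only on readings judged normal
        return anomalous

rng = random.Random(1)
monitor = PersonalBaseline()
for _ in range(100):
    monitor.check(rng.gauss(62, 2))         # synthetic resting heart rate, bpm
print(round(monitor.mean, 1), monitor.check(63.0), monitor.check(110.0))
```

Learning only from normal readings keeps outliers from corrupting the baseline; the trade-off is that a genuine, sustained change in the wearer’s physiology would need a separate re-baselining step.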

4. The Cloud–Edge Continuum

4.1 Cloud Strengths: Scale and Central Intelligence

Cloud computing remains indispensable for large-scale model training, global coordination, and data aggregation. Training AI systems such as GPT, Gemini, or Claude requires clusters of thousands of GPUs or TPUs, far beyond what edge hardware can provide. The cloud thus serves as the “central brain” of the GCF, learning global patterns and disseminating knowledge to edge nodes.

4.2 Edge Strengths: Immediacy and Privacy

The edge excels in real-time responsiveness. Applications such as autonomous driving, industrial robotics, AR/VR, and remote surgery cannot tolerate the round-trip delay to a distant cloud region, whereas edge devices can run inference locally within milliseconds. Furthermore, because data remains close to its source, privacy and regulatory compliance become easier to achieve, a critical factor under laws such as the EU’s GDPR or China’s Data Security Law.
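A quick calculation shows why milliseconds matter. The sketch converts a vehicle’s speed and a processing delay into the distance travelled before a result arrives. The 10 ms and 150 ms latencies are illustrative assumptions for local inference and a distant cloud round trip, not measurements.

```python
def distance_during_latency(speed_kmh, latency_ms):
    """Metres travelled at speed_kmh while waiting latency_ms for a result."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000

for label, latency_ms in [("on-vehicle inference", 10), ("distant cloud round trip", 150)]:
    print(f"{label}: {distance_during_latency(100, latency_ms):.2f} m")
# on-vehicle inference: 0.28 m
# distant cloud round trip: 4.17 m
```

At 100 km/h, the difference is roughly a car length of travel before the system can react.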

4.3 The Fog Layer: Bridging Cloud and Edge

Between cloud and edge lies the fog layer: intermediate nodes like regional data centers, base stations, and gateways. Fog computing reduces latency while maintaining broader visibility than individual edge devices. It acts as a coordination layer—aggregating data, caching models, and handling regional optimization.
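Model caching at the fog layer can be sketched as a least-recently-used (LRU) cache: edge devices request models from a nearby fog node, which fetches from the cloud only on a miss. The class, the size accounting, and the `fetch_from_cloud` callback are hypothetical; a real deployment would also handle model versioning and invalidation.

```python
from collections import OrderedDict

class FogModelCache:
    """LRU cache of model artifacts held at a fog node (hypothetical design)."""

    def __init__(self, capacity_mb, fetch_from_cloud):
        self.capacity_mb = capacity_mb
        self.fetch_from_cloud = fetch_from_cloud   # callable: model_id -> (blob, size_mb)
        self.entries = OrderedDict()               # model_id -> (blob, size_mb), oldest first
        self.used_mb = 0.0
        self.hits = 0
        self.misses = 0

    def get(self, model_id):
        if model_id in self.entries:
            self.entries.move_to_end(model_id)     # mark as most recently used
            self.hits += 1
            return self.entries[model_id][0]
        self.misses += 1
        blob, size_mb = self.fetch_from_cloud(model_id)
        if size_mb > self.capacity_mb:
            return blob                            # too large to cache; serve directly
        while self.used_mb + size_mb > self.capacity_mb:
            _, (_, evicted_mb) = self.entries.popitem(last=False)   # evict least recently used
            self.used_mb -= evicted_mb
        self.entries[model_id] = (blob, size_mb)
        self.used_mb += size_mb
        return blob

sizes = {"detector-v3": 40, "ocr-v1": 40, "anomaly-v2": 40}
cache = FogModelCache(100, lambda mid: (f"<weights of {mid}>", sizes[mid]))
for model_id in ["detector-v3", "ocr-v1", "detector-v3", "anomaly-v2"]:
    cache.get(model_id)
print(list(cache.entries), cache.hits, cache.misses)   # ['detector-v3', 'anomaly-v2'] 1 3
```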

4.4 Workload Orchestration

Orchestration platforms such as KubeEdge, Open Horizon, and Azure Arc manage where and when computation occurs, and AI-driven policies built on top of them can continuously evaluate conditions (network bandwidth, energy cost, hardware load, and latency sensitivity) to offload tasks dynamically. This fluid mobility of workloads transforms the GCF into a self-regulating organism.
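The kind of decision such a policy makes can be sketched as constrained scoring: discard nodes that would miss the task’s deadline or are overloaded, then pick the cheapest remaining node by a weighted cost. The node attributes, weights, and thresholds below are hypothetical and do not reflect the internals of KubeEdge, Open Horizon, or Azure Arc.

```python
from dataclasses import dataclass

@dataclass
class Node:
    name: str
    rtt_ms: float        # network round trip from the data source
    compute_ms: float    # estimated execution time of the task on this node
    energy_cost: float   # relative energy cost per task (hypothetical units)
    load: float          # current utilisation, 0..1

def place(deadline_ms, nodes, w_latency=1.0, w_energy=1.0, w_load=1.0, max_load=0.9):
    """Return the feasible node with the lowest weighted cost, or None."""
    feasible = [n for n in nodes
                if n.load < max_load and n.rtt_ms + n.compute_ms <= deadline_ms]
    if not feasible:
        return None

    def cost(n):
        latency_share = (n.rtt_ms + n.compute_ms) / deadline_ms
        return w_latency * latency_share + w_energy * n.energy_cost + w_load * n.load

    return min(feasible, key=cost)

nodes = [
    Node("edge", rtt_ms=1, compute_ms=40, energy_cost=3.0, load=0.5),
    Node("fog", rtt_ms=8, compute_ms=12, energy_cost=1.5, load=0.3),
    Node("cloud", rtt_ms=80, compute_ms=3, energy_cost=0.5, load=0.2),
]
print(place(30, nodes).name)    # fog: the only node that finishes within 30 ms
print(place(500, nodes).name)   # cloud: with a loose deadline, energy cost dominates
print(place(5, nodes))          # None: no node can meet 5 ms
```

In an AI-driven variant, the hand-set weights and the `compute_ms` estimates would themselves be learned from observed behaviour.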

5. Real-World Applications

5.1 Autonomous Mobility

Self-driving cars, drones, and smart logistics systems epitomize AI–edge collaboration. Each vehicle functions as an edge node, performing local sensor fusion and decision-making, while the cloud aggregates fleet-wide data for global model updates. Tesla’s shadow mode and Mobileye’s crowdsourced mapping illustrate how local intelligence and global learning coevolve.

5.2 Smart Manufacturing and Industry 4.0

In industrial environments, latency and reliability are critical. Edge AI systems monitor machinery, detect anomalies, and optimize production lines in real time. The cloud component conducts predictive maintenance analysis and cross-site optimization. Companies like Siemens and Bosch deploy industrial edge platforms integrating AI inference with cloud analytics.

5.3 Healthcare and Personalized Medicine

Edge AI enables privacy-preserving health monitoring. Wearables and medical sensors analyze signals locally and send only aggregated or de-identified summaries to the cloud. During the COVID-19 pandemic, for example, smartphone exposure-notification systems matched contact keys on the device rather than on a central server, and institutions on several continents jointly trained federated models to predict patients’ oxygen needs without pooling patient records. Future telemedicine systems will rely on GCF integration for diagnosis, treatment, and health prediction.

5.4 Smart Cities and Infrastructure

Urban systems such as traffic control, energy management, and waste recycling depend on massive sensor networks. AI at the edge manages immediate responses, such as retiming traffic signals or balancing microgrids. The cloud aggregates these insights to optimize citywide planning. Singapore’s Smart Nation initiative and Alibaba’s City Brain deployments in Chinese cities such as Hangzhou showcase early forms of GCF-driven governance.

5.5 Environmental Monitoring

Distributed AI sensors can detect deforestation, pollution, and climate anomalies in real time. By processing data locally via solar-powered edge devices, environmental monitoring becomes more sustainable. The cloud aggregates findings to feed climate models, forming a planetary-scale feedback system—a digital “nervous system” for Earth.

6. Technical and Architectural Challenges

6.1 Latency and Bandwidth Trade-offs

Despite advances in 5G/6G, bandwidth remains limited in many regions. Determining the optimal division of computation between edge and cloud is a nontrivial problem. Excess offloading can create bottlenecks; too much local processing can drain power and reduce model quality. AI-based schedulers that predict workloads and adapt dynamically are essential.
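A simplified latency model makes the trade-off concrete: offloading pays off when upload time plus network round trip plus remote execution is shorter than running locally. This sketch assumes a fixed workload measured in operations and constant throughputs, and it ignores energy and queuing; all numbers are illustrative.

```python
def local_latency_s(work_ops, local_ops_per_s):
    return work_ops / local_ops_per_s

def offload_latency_s(work_ops, input_bytes, uplink_bps, rtt_s, remote_ops_per_s):
    upload = input_bytes * 8 / uplink_bps          # time to send the input
    return upload + rtt_s + work_ops / remote_ops_per_s

def should_offload(work_ops, input_bytes, uplink_bps, rtt_s,
                   local_ops_per_s, remote_ops_per_s):
    remote = offload_latency_s(work_ops, input_bytes, uplink_bps, rtt_s, remote_ops_per_s)
    return remote < local_latency_s(work_ops, local_ops_per_s)

task = dict(work_ops=2e9, input_bytes=200_000, rtt_s=0.05,
            local_ops_per_s=1.5e9, remote_ops_per_s=1e11)
print(should_offload(uplink_bps=50e6, **task))   # True: about 0.10 s remote vs 1.33 s local
print(should_offload(uplink_bps=1e6, **task))    # False: a 1.6 s upload dominates
```

The same task flips from “offload” to “run locally” purely because of uplink bandwidth, which is why schedulers need current network estimates rather than fixed rules.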

6.2 Model Synchronization and Drift

As edge devices operate autonomously, their local models may diverge from the global version, a phenomenon the federated learning literature calls client drift (distinct from model drift in the sense of accuracy decay under shifting data). Maintaining consistency across millions of nodes requires efficient synchronization protocols and adaptive federated algorithms that balance convergence speed against bandwidth cost.
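One possible synchronization policy, sketched below under stated assumptions, lets each device communicate only when its local model has moved more than a threshold distance from the last global model it received. The threshold, learning rate, and the `server_merge` callback are hypothetical stand-ins for a real aggregation service.

```python
import math

def l2_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

class DriftAwareClient:
    """Uploads its model only after drifting far enough from the last global model."""

    def __init__(self, global_weights, threshold):
        self.weights = list(global_weights)
        self.reference = list(global_weights)   # last global model received
        self.threshold = threshold
        self.uploads = 0

    def local_step(self, gradient, lr=0.1):
        self.weights = [w - lr * g for w, g in zip(self.weights, gradient)]

    def maybe_sync(self, server_merge):
        """server_merge: hypothetical callable(weights) -> new global weights."""
        if l2_distance(self.weights, self.reference) < self.threshold:
            return False                        # skip the round trip and save bandwidth
        new_global = server_merge(self.weights)
        self.weights = list(new_global)
        self.reference = list(new_global)
        self.uploads += 1
        return True

client = DriftAwareClient([0.0, 0.0], threshold=0.45)
synced = []
for _ in range(6):
    client.local_step([1.0, 0.0])                                   # toy local gradient
    synced.append(client.maybe_sync(lambda w: [x / 2 for x in w]))  # toy server merge
print(synced, client.uploads)
```

Raising the threshold trades model consistency for fewer uploads, which is the balance between convergence and bandwidth described above.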

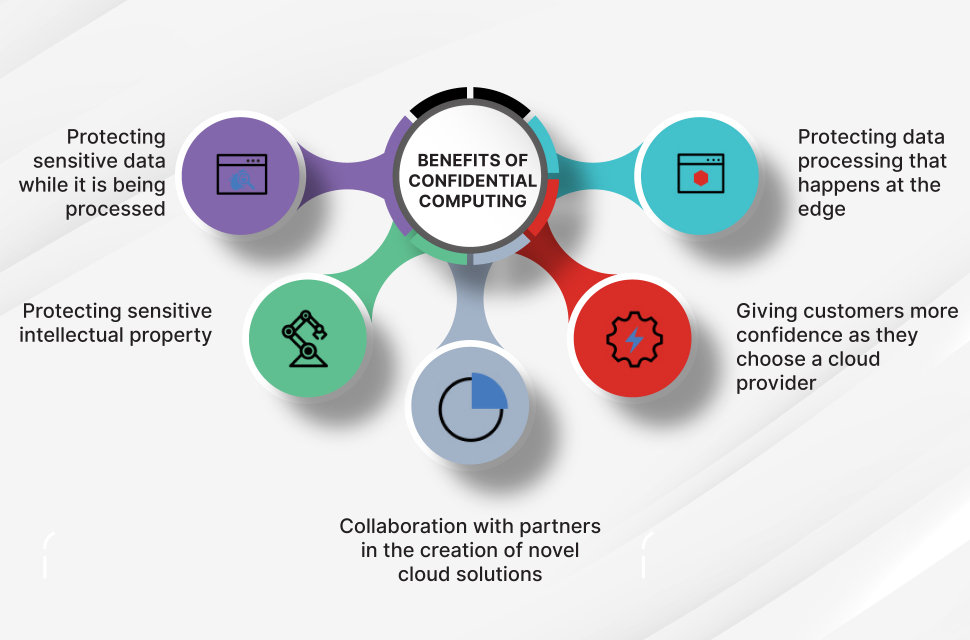

6.3 Security and Privacy

Edge devices are often physically exposed and vulnerable to tampering. Attackers may insert poisoned data or compromise local models. Federated learning introduces new attack surfaces such as model inversion and gradient leakage. Defense mechanisms include secure enclaves, homomorphic encryption, and blockchain-based verification to ensure trust in distributed training.
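Additively homomorphic encryption is what lets an aggregator combine encrypted updates without reading them. The sketch below is a toy version of the Paillier cryptosystem with deliberately tiny primes. It assumes a single key holder separate from the aggregator and fixed-point encoding of update values; it is insecure and purely illustrative, and real systems rely on vetted libraries and far larger keys.

```python
import math
import random

# Toy primes for illustration only; real keys use primes of 1024 bits or more.
P, Q = 999_983, 1_000_003

def keygen(p=P, q=Q):
    """Return the public modulus n and the private pair (lambda, mu)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)   # lcm(p-1, q-1)
    mu = pow(lam, -1, n)          # modular inverse; valid because we use g = n + 1
    return n, (lam, mu)

def encrypt(n, m, rng=random):
    """Paillier encryption (g = n + 1) of an integer m; negatives wrap modulo n."""
    n2 = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:    # r must be invertible modulo n
        r = rng.randrange(1, n)
    # (n + 1)^m mod n^2 equals 1 + m*n, which avoids a large exponentiation.
    return (1 + (m % n) * n) * pow(r, n, n2) % n2

def decrypt(n, priv, c):
    lam, mu = priv
    u = pow(c, lam, n * n)
    m = (u - 1) // n * mu % n
    return m - n if m > n // 2 else m   # map back to a signed integer

def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return c1 * c2 % (n * n)

n, priv = keygen()
SCALE = 1000                                  # fixed-point encoding of update values
device_updates = [0.125, -0.5, 0.25]          # one made-up gradient entry per device
total = encrypt(n, 0)
for value in device_updates:
    # The aggregator only ever handles ciphertexts.
    total = add_encrypted(n, total, encrypt(n, round(value * SCALE)))
print(decrypt(n, priv, total) / SCALE)        # -0.125
```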

6.4 Resource Constraints

Edge devices operate under strict power, memory, and computational limits. Deploying large AI models requires aggressive quantization, pruning, and compression techniques. Recent advances in dynamic neural networks—which activate only relevant parts of a model per input—help mitigate this limitation.
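Quantization is the most widely used of these techniques. Below is a minimal sketch of symmetric per-tensor int8 quantization: each float weight is stored as an 8-bit integer plus one shared scale, cutting storage to a quarter of float32, with a per-weight rounding error of at most half the scale. The weights are made-up values, and real toolchains add per-channel scales, zero points, and calibration data.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    return [v * scale for v in q]

weights = [0.82, -1.27, 0.003, 0.5, -0.064]      # made-up float32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max_err={max_err:.4f}")
```

Very small weights such as 0.003 round to zero, which is one reason quantization and pruning are often applied together.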

6.5 Management Complexity

Coordinating billions of heterogeneous devices introduces unprecedented complexity. Unlike centralized cloud systems, the GCF must handle intermittent connectivity, varying hardware, and dynamic topology. Self-organizing orchestration frameworks using reinforcement learning are emerging to automate these management tasks.
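The simplest reinforcement-learning formulation of placement is a multi-armed bandit: the orchestrator repeatedly chooses a tier, observes a reward such as negative latency, and shifts toward choices that have worked. The epsilon-greedy sketch below is an illustrative simplification (no state, and a simulated environment with assumed mean latencies), not any particular framework’s algorithm.

```python
import random

class EpsilonGreedyPlacer:
    """Learns which tier yields the best average reward from experience."""

    def __init__(self, actions, epsilon=0.1, rng=None):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = rng or random.Random()
        self.counts = {a: 0 for a in self.actions}
        self.values = {a: 0.0 for a in self.actions}   # running mean reward per action

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)                 # explore
        return max(self.actions, key=lambda a: self.values[a])   # exploit

    def learn(self, action, reward):
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]

def simulated_latency_ms(tier, rng):
    """Hypothetical environment: mean latency per tier plus Gaussian noise."""
    means = {"edge": 40.0, "fog": 20.0, "cloud": 90.0}
    return max(0.0, rng.gauss(means[tier], 5.0))

rng = random.Random(7)
placer = EpsilonGreedyPlacer(["edge", "fog", "cloud"], epsilon=0.1, rng=rng)
for _ in range(2000):
    tier = placer.choose()
    placer.learn(tier, reward=-simulated_latency_ms(tier, rng))
print(placer.counts)
```

Full reinforcement-learning orchestrators extend this idea with state (current load, link quality) so that the best choice can change with conditions.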

7. Energy and Sustainability Considerations

AI–edge collaboration is not only about performance but also about sustainability. Training a single large model can consume on the order of gigawatt-hours of electricity; distributing workloads intelligently can reduce the associated carbon footprint, although the savings depend on the workload and the local energy mix.

7.1 Edge Energy Optimization

Edge nodes can operate on renewable energy or recover waste heat locally. AI algorithms optimize power usage by adjusting model precision or activation frequency. For example, sensors in remote areas may enter “sleep” modes guided by predictive AI, waking only when events are likely.
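Predictive duty cycling can be sketched under simple assumptions: the “predictor” is just the historical event frequency per hour of day, and the power figures (50 mW active, 0.05 mW asleep) are illustrative rather than taken from a specific device. A learned model could replace the frequency table without changing the structure.

```python
from collections import Counter

def plan_wake_hours(event_hours, days_observed, threshold=0.2):
    """Return hours of day (0-23) whose historical event rate exceeds threshold.

    Assumes event_hours lists the hour of each past event, with at most one
    event counted per hour per day, so counts / days is a per-hour probability.
    """
    counts = Counter(event_hours)
    return [h for h in range(24) if counts[h] / days_observed > threshold]

def daily_energy_mwh(wake_hours, active_mw=50.0, sleep_mw=0.05):
    """Energy per day with the sensor active only during wake_hours."""
    awake = len(wake_hours)
    return awake * active_mw + (24 - awake) * sleep_mw

# Ten days of synthetic wildlife detections clustered around dawn and dusk.
history = [5] * 8 + [6] * 6 + [19] * 7 + [20] * 5 + [13]
plan = plan_wake_hours(history, days_observed=10)
print(plan)                                             # [5, 6, 19, 20]
print(daily_energy_mwh(plan), daily_energy_mwh(range(24)))
```

The single midday event is not covered by the plan; choosing the threshold is a direct trade-off between energy saved and rare events missed.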

7.2 Cloud–Edge Energy Coordination

The GCF can perform energy-aware scheduling: workloads migrate to nodes powered by renewable sources or located in cooler climates. This concept—sometimes called “follow the green compute”—aligns computational demand with sustainable supply, turning the GCF into a global balancing mechanism between energy and intelligence.
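Energy-aware scheduling of this kind can be sketched as choosing, among regions that satisfy a job’s latency limit, the one with the lowest estimated emissions: energy × facility overhead (power usage effectiveness, PUE, which tends to be lower where cooler climates allow free cooling) × grid carbon intensity. Region names and all figures below are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Region:
    name: str
    carbon_g_per_kwh: float    # current grid carbon intensity
    pue: float                 # facility overhead multiplier
    latency_ms: float          # network latency to the requester

def pick_region(job_kwh, max_latency_ms, regions):
    """Lowest-emission region within the latency limit; returns (region, kg CO2e)."""
    ok = [r for r in regions if r.latency_ms <= max_latency_ms]
    if not ok:
        return None, None
    best = min(ok, key=lambda r: job_kwh * r.pue * r.carbon_g_per_kwh)
    return best, job_kwh * best.pue * best.carbon_g_per_kwh / 1000

regions = [
    Region("nordic-hydro", carbon_g_per_kwh=30, pue=1.1, latency_ms=60),
    Region("local-metro", carbon_g_per_kwh=400, pue=1.5, latency_ms=10),
    Region("desert-solar", carbon_g_per_kwh=120, pue=1.3, latency_ms=35),
]
best, kg = pick_region(job_kwh=100, max_latency_ms=100, regions=regions)
print(best.name, round(kg, 1))        # nordic-hydro 3.3
best, kg = pick_region(job_kwh=100, max_latency_ms=40, regions=regions)
print(best.name, round(kg, 1))        # desert-solar 15.6
```

Delay-tolerant jobs such as batch training can accept the looser latency limit and therefore follow the cleanest supply.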

8. Security, Trust, and Governance

8.1 Zero-Trust Edge Architecture

Given the distributed nature of edge systems, traditional perimeter-based security is obsolete. A zero-trust model verifies every device, user, and data transaction continuously. AI plays a dual role—both as target and protector—since adversarial attacks can manipulate models, while AI-based intrusion detection can defend against them.
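Continuous verification can be illustrated at the level of a single request. In this simplified sketch every message carries an HMAC computed with a per-device key plus a timestamp, and the receiver checks the device identity, freshness, and integrity on every call. The key registry, message format, and 30-second window are hypothetical; production zero-trust deployments typically rely on mutual TLS with device certificates, hardware attestation, and policy engines.

```python
import hashlib
import hmac
import time

# Hypothetical registry of per-device keys provisioned at manufacture.
DEVICE_KEYS = {"sensor-17": b"per-device-secret-provisioned-at-manufacture"}
MAX_SKEW_S = 30

def _mac(key, device_id, ts, payload):
    msg = f"{device_id}|{ts}|".encode() + payload
    return hmac.new(key, msg, hashlib.sha256).hexdigest()

def sign(device_id, payload, key, ts=None):
    ts = int(time.time()) if ts is None else ts
    return ts, _mac(key, device_id, ts, payload)

def verify(device_id, payload, ts, tag, now=None):
    """Check every request: known device, fresh timestamp, valid MAC."""
    key = DEVICE_KEYS.get(device_id)
    if key is None:
        return False                                   # unknown device: never trusted
    now = int(time.time()) if now is None else now
    if abs(now - ts) > MAX_SKEW_S:
        return False                                   # stale message; limits the replay window
    return hmac.compare_digest(_mac(key, device_id, ts, payload), tag)   # constant-time compare

payload = b'{"temp": 21.5}'
ts, tag = sign("sensor-17", payload, DEVICE_KEYS["sensor-17"])
print(verify("sensor-17", payload, ts, tag))             # True
print(verify("sensor-17", b'{"temp": 99.0}', ts, tag))   # False: payload was altered
```

A timestamp window narrows, but does not eliminate, replay; a receiver-side nonce cache would close the remaining gap.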

8.2 Privacy-Preserving AI

Techniques such as differential privacy, secure multiparty computation (MPC), and homomorphic encryption allow learning from distributed data without revealing sensitive information. These tools are essential for sectors like healthcare and finance, where regulatory compliance is strict.
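Of these, differential privacy is the simplest to sketch. Below, the Laplace mechanism releases the mean of clipped values with noise calibrated to how much a single record can change the result. The clipping bounds, epsilon, and the synthetic heart-rate data are illustrative assumptions, and the number of records is treated as public.

```python
import random

def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_mean(values, lower, upper, epsilon, rng=random):
    """Epsilon-differentially-private mean via the Laplace mechanism.

    Values are clipped to [lower, upper]; with the count n treated as public,
    replacing one record changes the mean by at most (upper - lower) / n.
    """
    clipped = [min(max(v, lower), upper) for v in values]
    n = len(clipped)
    sensitivity = (upper - lower) / n
    return sum(clipped) / n + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(42)
heart_rates = [rng.gauss(70, 8) for _ in range(1000)]    # synthetic readings, bpm
print(private_mean(heart_rates, lower=30, upper=200, epsilon=0.5, rng=rng))
```

Smaller epsilon means stronger privacy and proportionally more noise; with many records the noise on an aggregate stays small.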

8.3 Governance in a Distributed Fabric

No single entity can govern the entire GCF. Instead, a federated governance model—similar to Internet governance—is required. Standards bodies like IEEE, ISO, and W3C may define interoperability and ethical guidelines, while national regulators ensure compliance with local laws. Trust frameworks and audit trails based on blockchain could provide transparency and accountability.
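The tamper-evidence that makes blockchain-style audit trails attractive comes from hash chaining, which can be sketched on its own: each log entry commits to the hash of the previous entry, so editing any past record breaks every later link. This minimal, hypothetical sketch omits what a full blockchain adds, namely replication across independent parties and a consensus protocol.

```python
import hashlib
import json

class AuditLog:
    """Append-only log in which every entry commits to the hash of the previous one."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash, record):
        body = json.dumps(record, sort_keys=True)          # canonical serialization
        return hashlib.sha256((prev_hash + body).encode()).hexdigest()

    def append(self, record):
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(prev, record)
        self.entries.append({"record": record, "prev": prev, "hash": digest})
        return digest

    def verify(self):
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or self._digest(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

log = AuditLog()
log.append({"node": "edge-042", "action": "model_update", "version": 7})
log.append({"node": "fog-03", "action": "aggregate", "round": 12})
print(log.verify())                                    # True
log.entries[0]["record"]["version"] = 8                # tamper with history
print(log.verify())                                    # False
```

A party that controls the whole log could recompute every hash, which is why the latest hash must be published to, or replicated by, other parties for the trail to be trustworthy.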

9. The Economic Dimension of AI–Edge Collaboration

9.1 Decentralized Compute Markets

Edge nodes owned by individuals or companies could rent out spare capacity via decentralized marketplaces. AI-driven platforms would match supply and demand in real time, creating a distributed “compute economy” that could broaden access to AI infrastructure and reduce dependence on hyperscalers.

9.2 Cost Optimization and Local Empowerment

Running inference locally often reduces operational costs by minimizing data transfer and cloud usage fees. For developing nations, local edge compute clusters offer a path to digital sovereignty—retaining data and value creation domestically while still connecting to global intelligence networks.

9.3 New Business Models

The fusion of AI and edge computing enables new service models: AI-as-a-Utility, Sensing-as-a-Service, and Micro-Inference-as-a-Service. A logistics company might pay per kilometer of real-time analytics, while a city could subscribe to “intelligence coverage” zones rather than physical servers. The GCF thus supports a shift from ownership to usage-based intelligence economies.

10. Future Research Directions

10.1 Continual and Federated Learning

AI models must evolve continuously as the environment changes. Continual learning allows adaptation without catastrophic forgetting, while federated continual learning enables global coordination without centralized data collection. Research is ongoing into algorithms that maintain global coherence while respecting local dynamics.

10.2 Cognitive Edge Systems

Future edge devices may exhibit cognitive capabilities: reasoning, planning, and social interaction. These “cognitive edges” will collaborate autonomously—forming emergent swarms capable of self-organization. The combination of edge cognition and global oversight could create a distributed collective intelligence reminiscent of biological ecosystems.

10.3 Integration with Quantum and Neuromorphic Computing

Quantum computing could eventually augment cloud-level optimization, for example on hard combinatorial scheduling problems, while neuromorphic chips handle sensory processing at the edge. This hybrid model would extend the GCF beyond classical computing paradigms into new physical frontiers of intelligence.

10.4 Ethical AI at the Edge

Embedding ethics directly into edge AI—through explainability, fairness, and transparency—will become essential. Decentralized governance frameworks could enable communities to define local ethical parameters while adhering to global standards, ensuring that intelligence remains both autonomous and accountable.

11. Case Studies

11.1 Amazon AWS Greengrass

AWS Greengrass enables IoT devices to run AWS Lambda functions locally, performing inference even when offline. It synchronizes results with the cloud when connectivity resumes—an early example of adaptive cloud–edge collaboration in industrial and consumer contexts.

11.2 Huawei Atlas and Ascend Ecosystem

Huawei’s Atlas AI edge platform integrates Ascend chips with an orchestration framework that bridges edge, fog, and cloud. It supports real-time video analytics for smart cities and transportation systems, exemplifying large-scale GCF deployment under data sovereignty constraints.

11.3 NVIDIA Metropolis

NVIDIA’s Metropolis platform brings AI to the edge for video surveillance, traffic control, and retail analytics. By leveraging GPU acceleration and centralized management, it demonstrates the practical benefits of distributed intelligence.

11.4 Open Source: EdgeX Foundry and LF Edge

The Linux Foundation’s LF Edge initiative promotes open standards for edge computing. Projects like EdgeX Foundry create interoperable frameworks allowing devices, clouds, and AI services from different vendors to collaborate within a unified global fabric.

12. Philosophical and Societal Implications

The fusion of AI and edge computing represents more than a technical evolution—it marks the birth of distributed cognition. Humanity is building an externalized nervous system, with sensors and processors woven through the environment. This pervasive intelligence blurs boundaries between human and machine, private and public, digital and physical.

Ethically, it raises questions: Who owns the edge intelligence generated by billions of devices? Can autonomous edge agents make moral decisions? How do we ensure transparency in systems too large for human comprehension? Addressing these questions is as vital as solving the engineering challenges.

13. Conclusion

AI and edge collaboration within the Global Compute Fabric is transforming computation from a centralized service into a living, distributed ecosystem. Together, they bring intelligence closer to the world—reducing latency, preserving privacy, and optimizing energy. The GCF enables the seamless flow of cognition across scales: from a sensor in a factory to an AI supercomputer analyzing global trends.

The challenges remain formidable (synchronization, sustainability, security), but the trajectory is clear. The 21st century will witness the rise of an intelligent planetary infrastructure, where every object, device, and network node participates in shared computation. This is not merely an evolution of technology but the emergence of a new layer of distributed intelligence woven into the fabric of civilization.