Introduction: From Automation to Coexistence

For much of the technological era, automation has been perceived as a replacement for human labor — machines taking over repetitive, dangerous, or inefficient tasks. Yet, as artificial intelligence and robotics advance toward full autonomy, a new paradigm is emerging: collaboration rather than substitution.

We are entering an age of human–autonomy symbiosis, where intelligent systems do not merely execute orders but learn, reason, and act alongside humans. From factories and hospitals to homes and creative industries, autonomous systems are becoming teammates, not tools. They augment human capabilities, compensate for our limitations, and, in some cases, challenge us to redefine what it means to be human.

This essay explores how collaboration between humans and autonomous systems is reshaping work, creativity, ethics, and society. It argues that the future will not belong to either humans or machines alone, but to the partnerships forged between them — relationships grounded in trust, empathy, and shared intelligence.

1. Redefining Collaboration in the Age of Autonomy

1.1 From Human Supervision to Mutual Adaptation

Traditional automation required human oversight — a person monitored the machine, correcting errors when necessary. But in autonomous systems, the relationship is more fluid. Machines now anticipate human needs, adapt to behavior, and even make decisions that influence human action.

This mutual adaptation transforms the human–machine interface from one of command to one of cooperation. In industrial robotics, for example, cobots (collaborative robots) share workspace with humans, adjusting their movements in real time to ensure safety and efficiency. In healthcare, autonomous assistants monitor patients’ vitals and alert doctors before crises occur.

1.2 The Psychology of Trust and Control

For collaboration to succeed, humans must trust machines — but not blindly. Too little trust leads to rejection of automation; too much leads to complacency and risk. Designing for calibrated trust requires transparency, predictability, and reliability.

Research in human–computer interaction shows that users trust systems more when they understand how those systems reach their decisions. Explainable AI, visual feedback, and adaptive interfaces all help bridge the psychological gap between human intuition and algorithmic reasoning.
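One way to make "calibrated trust" concrete is to compare how often people follow a system's recommendations with how often those recommendations turn out to be right. The sketch below is illustrative only: the `Interaction` record, the `trust_calibration` and `describe` helpers, and the 0.1 tolerance are assumptions introduced here, not an established metric from the literature.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    """One recommendation made by the system (hypothetical record shape)."""
    system_correct: bool  # was the recommendation right in hindsight?
    user_accepted: bool   # did the human follow it?


def trust_calibration(interactions):
    """Return (reliability, reliance, gap) over a list of interactions.

    reliability: share of recommendations that were correct
    reliance:    share of recommendations the human accepted
    gap:         reliance - reliability (positive means over-trust)
    """
    if not interactions:
        raise ValueError("need at least one interaction")
    n = len(interactions)
    reliability = sum(i.system_correct for i in interactions) / n
    reliance = sum(i.user_accepted for i in interactions) / n
    return reliability, reliance, reliance - reliability


def describe(gap, tolerance=0.1):
    """Label the gap; the tolerance is an arbitrary illustrative choice."""
    if gap > tolerance:
        return "over-trust"
    if gap < -tolerance:
        return "under-trust"
    return "calibrated"


history = [
    Interaction(system_correct=True, user_accepted=True),
    Interaction(system_correct=False, user_accepted=True),
    Interaction(system_correct=True, user_accepted=True),
    Interaction(system_correct=False, user_accepted=True),
]
reliability, reliance, gap = trust_calibration(history)
print(describe(gap))
```

A gap near zero suggests that reliance roughly tracks reliability. A large positive gap corresponds to the complacency described above, and a large negative gap to rejection of useful automation.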

1.3 Symbiotic Design Principles

Symbiotic collaboration relies on three core principles:

- Transparency: The system must communicate its goals and reasoning clearly.

- Adaptability: It should learn from human behavior and adjust dynamically.

- Complementarity: Tasks should be distributed based on strengths — humans provide context, emotion, and moral reasoning, while machines contribute precision, speed, and data analysis.

Together, these principles form the foundation of ethical and efficient human–autonomy systems.

2. Collaborative Intelligence: When Humans and Machines Think Together

2.1 Beyond Artificial Intelligence: Toward Collective Intelligence

The future of AI is not artificial, but collective. Collaborative intelligence (CI) refers to the integration of human creativity with machine computation to solve problems neither could address alone.

For example, in drug discovery, AI algorithms can analyze millions of compounds, but scientists interpret biological meaning and guide experiments. In journalism, AI assists with data visualization and fact-checking, allowing reporters to focus on storytelling and analysis.

2.2 Co-Creation in Art and Design

Autonomous systems are increasingly participating in creative processes once considered uniquely human. Generative AI tools compose music, design architecture, and paint artworks that provoke aesthetic and philosophical debate.

Yet, the most powerful creations often emerge from co-creation — human artists guiding AI tools to amplify imagination. The role of the creator evolves from sole author to curator of intelligence, orchestrating both human emotion and machine computation.

2.3 Collaborative Problem-Solving and Decision-Making

In complex domains like climate modeling or financial forecasting, human–autonomy collaboration enhances decision-making. Machines process immense data sets; humans interpret uncertainty and make value-laden judgments. This interplay between computation and conscience will define the governance of future autonomous societies.

3. The Workplace of the Future: Coexisting with Intelligent Systems

3.1 From Automation Anxiety to Augmentation Mindset

The narrative of automation as a job destroyer dominates popular discourse. Yet historical evidence suggests that while technology displaces certain roles, it also creates new ones. The key shift lies in mindset: from fearing replacement to embracing augmentation.

In manufacturing, robots perform high-precision tasks while humans handle design and quality oversight. In healthcare, AI can support diagnosis, but empathy and human connection remain irreplaceable. The workplace of the future will demand “hybrid skills” that combine technical fluency with emotional intelligence.

3.2 Human Skills That Endure

Autonomous systems excel at pattern recognition and optimization, but they lack intrinsic motivation, empathy, and moral intuition. Therefore, the skills most resistant to automation include:

- Emotional intelligence (empathy, communication, negotiation)

- Creative problem-solving (imagination, divergent thinking)

- Ethical reasoning (understanding fairness and responsibility)

- Contextual awareness (social, cultural, and environmental insight)

Rather than compete with machines, future workers will learn to amplify these human strengths through collaboration.

3.3 Hybrid Teams and Organizational Transformation

Forward-looking companies are already experimenting with hybrid human–AI teams. In logistics, AI predicts demand while humans handle exceptions. In law, AI reviews documents while lawyers focus on argumentation. Leadership, too, will evolve — from command-and-control to orchestration, guiding dynamic ecosystems of human and artificial collaborators.

4. Ethics of Collaboration: Mutual Dependence and Moral Design

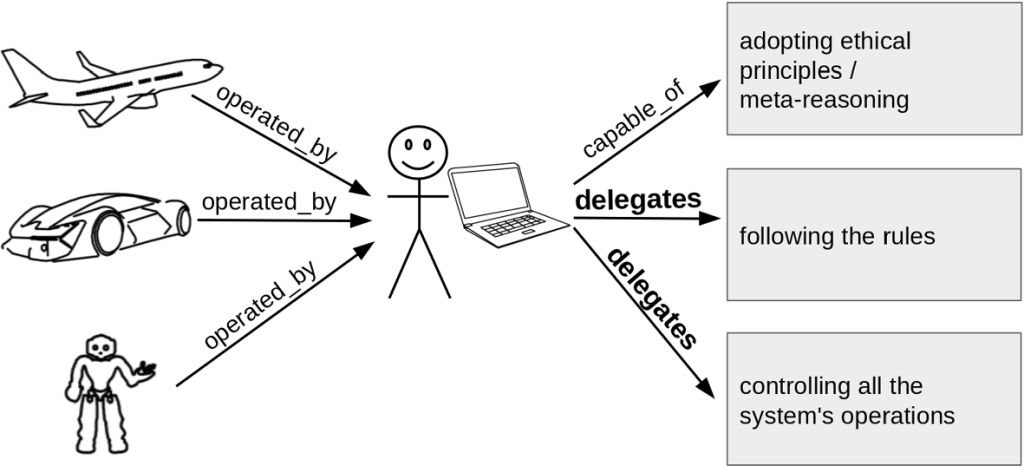

4.1 Responsibility in Shared Decision-Making

When humans and autonomous systems collaborate, responsibility becomes diffuse. If a medical AI misdiagnoses a patient, who is accountable — the developer, the doctor, or the algorithm? Ethical governance must establish frameworks for shared accountability and traceable decision logs to ensure fairness and transparency.
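A traceable decision log could take many forms. The following is a minimal sketch, assuming an in-memory, append-only log in which each entry stores a SHA-256 hash of its own content and of the previous entry, so that later tampering can be detected. The `DecisionLog` class and its field names (`actor`, `decision`, `rationale`) are hypothetical and chosen only for illustration.

```python
import hashlib
import json


class DecisionLog:
    """Append-only log; each entry is chained to the previous one by hash."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(record):
        # Hash every field except the stored hash itself.
        payload = {k: v for k, v in record.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, actor, decision, rationale):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "actor": actor,          # e.g. "model" or "clinician"
            "decision": decision,
            "rationale": rationale,
            "prev_hash": prev_hash,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    def verify(self):
        """Return True if no entry has been altered or reordered."""
        prev_hash = self.GENESIS
        for record in self.entries:
            if record["prev_hash"] != prev_hash:
                return False
            if record["hash"] != self._digest(record):
                return False
            prev_hash = record["hash"]
        return True


log = DecisionLog()
log.append("model", "flag scan as high risk", "pattern match on imaging features")
log.append("clinician", "order follow-up test", "agrees with flag, wants confirmation")
print(log.verify())
```

Recording both the machine's output and the human's response in the same chain keeps the sequence of contributions visible, which is the precondition for any framework of shared accountability. A production system would also need durable storage, timestamps, and access control, none of which are shown here.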

4.2 The Risk of Cognitive Offloading

As machines take over cognitive tasks, humans risk losing critical thinking skills. Studies of “automation bias” show that people who rely heavily on automation tend to defer to machine outputs even when those outputs are incorrect. True symbiosis requires designing systems that challenge human users to remain engaged and reflective.

4.3 Empathy in Design

Collaboration is not merely functional; it is emotional. Systems that respond to tone, gesture, and mood foster deeper connection and trust. Research in affective computing aims to give machines the ability to interpret human emotions—not to simulate empathy, but to support it.

An empathetic AI nurse, for example, may detect stress in a patient’s voice and notify a doctor before symptoms worsen. Ethical collaboration demands that such empathy be used to empower, not manipulate.

5. Designing for Symbiosis: Architecture of the Future

5.1 Cognitive Interfaces and Adaptive Environments

The next generation of collaboration will move beyond screens and keyboards. Brain–computer interfaces (BCIs), augmented reality (AR), and multimodal sensors will blur the boundaries between human cognition and machine computation.

Imagine a surgeon using AR overlays powered by autonomous imaging systems, or an engineer controlling drones through neural feedback. These interfaces represent not just technological tools but extensions of human agency.

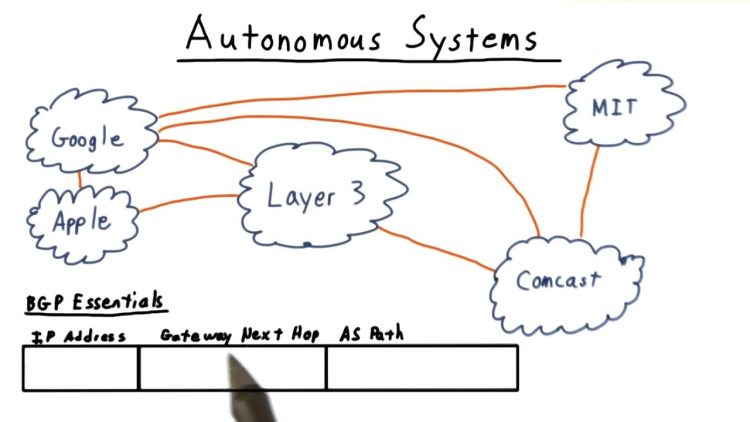

5.2 Autonomous Ecosystems and the Internet of Collaboration

As billions of devices gain autonomy, collaboration will extend into distributed networks. Smart cities, for instance, will coordinate transportation, energy, and healthcare systems in real time. Humans will interact with entire ecosystems of autonomy rather than individual machines.

This interconnected web—sometimes called the Internet of Collaboration—demands governance models that balance efficiency with privacy and inclusivity.

5.3 The Role of Design in Shaping Behavior

Design decisions encode values. Whether an AI assistant prioritizes convenience or privacy reflects human intention. Designers must recognize their ethical agency, ensuring that symbiotic systems enhance human dignity rather than erode it.

6. The Social Dimension: Culture, Inclusion, and Accessibility

6.1 Inclusive Design and Accessibility

Autonomous technologies hold immense potential to empower people with disabilities and older adults. Voice assistants, smart prosthetics, and self-driving wheelchairs enhance independence and participation in society. Designing for inclusion must be a moral imperative, not an afterthought.

6.2 Cultural Coevolution

Different societies will integrate autonomy differently, reflecting their cultural values. In collectivist cultures, AI may be viewed as a communal aid; in individualistic cultures, as a personal assistant. Understanding these cultural contexts ensures that collaboration remains meaningful and respectful worldwide.

6.3 Education for the Symbiotic Era

Future education must prepare individuals not just to use technology, but to coexist with it. Curricula should integrate ethics, digital literacy, and systems thinking, teaching students to question, interpret, and collaborate critically with intelligent systems.

7. The Philosophy of Human–Machine Coevolution

7.1 The Cyborg Metaphor Revisited

Philosopher Donna Haraway’s “A Cyborg Manifesto” envisioned humans and machines merging symbolically and biologically. Today, this metaphor is materializing in prosthetics, neural implants, and wearable AI. We are not replacing humanity with technology; we are expanding it.

7.2 Autonomy as Shared Consciousness

As systems learn from human feedback, and humans rely on machine augmentation, a kind of shared cognition emerges—a “networked consciousness” distributed across biological and digital nodes. This does not diminish individuality; it enhances collective intelligence.

7.3 The Ethical Frontier: What Does It Mean to Be Human?

When creativity, empathy, and reasoning are shared between human and machine, traditional definitions of humanity evolve. Perhaps what defines us is not exclusivity of intelligence, but our capacity for moral imagination—to create and collaborate with empathy and foresight.

8. Governance and the Future of Collaboration

8.1 Co-Governance Models

Governance of human–autonomy collaboration must move beyond regulation toward co-governance. Humans, machines, and institutions share adaptive roles in shaping ethical standards. Machine-learning systems might monitor bias in real time, while human oversight ensures moral alignment.
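The division of labor described here, with a machine watching for bias continuously and a human deciding what to do about it, can be sketched as a small streaming monitor. The example assumes one particular fairness measure, the gap in approval rates between groups (often called demographic parity difference). The `ParityMonitor` class, the 0.2 threshold, and the minimum sample size are illustrative assumptions, not recommended values.

```python
from collections import defaultdict


class ParityMonitor:
    """Tracks approval rates per group and flags large gaps for human review."""

    def __init__(self, threshold=0.2, min_samples=5):
        self.threshold = threshold      # largest tolerated gap in approval rates
        self.min_samples = min_samples  # ignore groups with too little data
        self.totals = defaultdict(int)
        self.positives = defaultdict(int)

    def record(self, group, approved):
        """Register one automated decision as it happens."""
        self.totals[group] += 1
        if approved:
            self.positives[group] += 1

    def gap(self):
        """Difference between the highest and lowest group approval rates."""
        rates = [
            self.positives[group] / count
            for group, count in self.totals.items()
            if count >= self.min_samples
        ]
        if len(rates) < 2:
            return 0.0
        return max(rates) - min(rates)

    def needs_human_review(self):
        # The monitor only raises the flag; the judgment stays with people.
        return self.gap() > self.threshold


monitor = ParityMonitor()
for _ in range(5):
    monitor.record("group_a", approved=True)
early_flag = monitor.needs_human_review()   # only one group seen so far
for _ in range(5):
    monitor.record("group_b", approved=False)
print(monitor.gap(), monitor.needs_human_review())
```

The monitor deliberately stops at raising a flag. Whether a gap reflects unfair treatment or a legitimate difference is a value-laden question, which is why the sketch hands it to human oversight rather than correcting anything automatically.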

8.2 Democratizing Autonomy

Access to autonomous technology must be equitable. Without inclusive policies, the benefits of collaboration could deepen digital divides. Public governance should ensure that AI-enhanced productivity and creativity are distributed across societies, not concentrated in a few corporations.

8.3 The Evolution of Rights and Agency

As autonomous agents act with increasing independence, society may need to redefine rights—not in the sense of “robot personhood,” but of relational accountability. What rights do humans have over autonomous systems, and what obligations do designers have toward them? These questions will define the legal and ethical architecture of the next century.

Conclusion: Designing Symbiotic Futures

The future of autonomy is not about domination or displacement—it is about partnership.

Autonomous systems will reshape work, creativity, and ethics, but their ultimate value lies in how they empower human flourishing. Collaboration, not competition, is the key to unlocking that potential.

We must design a world where machines amplify human imagination, where algorithms are transparent and just, and where technology serves as an ally in the pursuit of collective well-being.

Human–autonomy collaboration represents more than an engineering challenge; it is a civilizational project. It asks us to reimagine the boundaries of identity, agency, and cooperation.

If we can align intelligence—both human and artificial—with compassion and wisdom, we may yet build not just smart systems, but a wise civilization.