Introduction: The Human Story Behind Technology

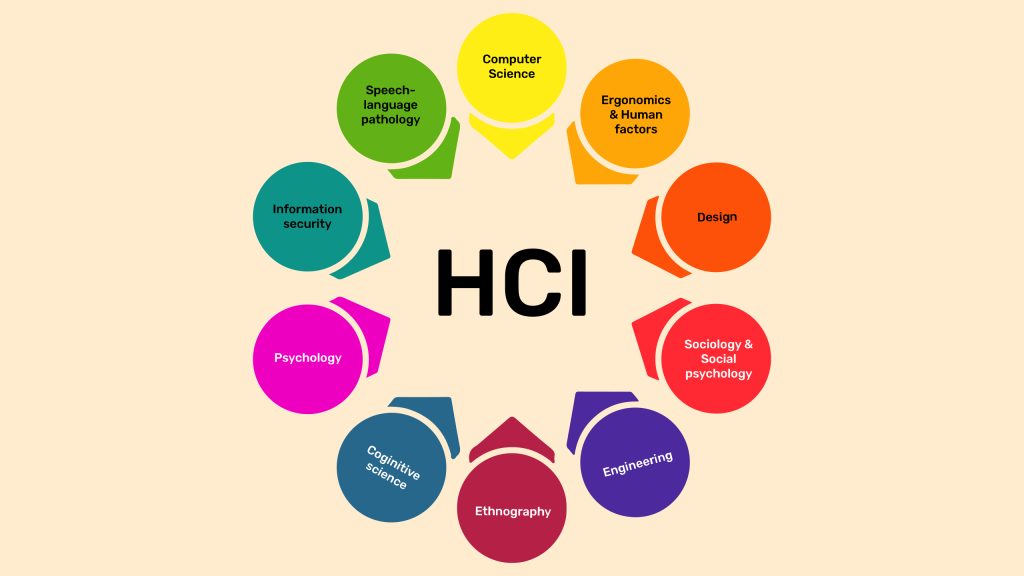

Human–Computer Interaction (HCI) is not merely a field of technology — it is a narrative about how humans learn to communicate with their creations. From the earliest punch cards to today’s emotion-aware interfaces, the evolution of HCI mirrors the evolution of human thought, perception, and social behavior. At its core, HCI explores how we can make machines understand humans — not only through commands but through context, emotion, and intuition.

This essay traces the journey of HCI across four major stages: the command age, the graphical revolution, the intelligent interface, and the empathic machine. Along the way, it examines how interaction design has transformed from mechanical efficiency to emotional connection, redefining what it means to “communicate” with technology.

1. The Command Age: Humans Speak Machine Language

1.1 The Early Mechanical Dialogue

In the mid-20th century, computing was an esoteric craft. Interaction meant feeding the machine punched cards, setting switches and cables, or typing cryptic instructions at a console, a far cry from the intuitive interfaces of today. Early computers like the ENIAC or IBM 1401 required users to work on the machine’s terms, through wiring, codes, and rigid command sequences, where even a single misplaced symbol could cause failure.

Interaction was one-directional: the human issued orders; the computer executed them without interpretation. This stage reflected a power dynamic where humans bent themselves to the logic of the machine. The goal was not “user experience” but raw computational control.

Yet, in this primitive conversation, the foundations of HCI were laid. Researchers began asking: Could computers adapt to human thought instead of forcing humans to think like computers?

1.2 The Rise of Usability and Human Factors

In the 1960s and 1970s, pioneers like Douglas Engelbart and J.C.R. Licklider envisioned computers as “intelligence amplifiers” rather than mere calculators. Engelbart’s invention of the mouse and his early demonstrations of working hypertext transformed how humans could interact with digital information.

The emerging field of human factors engineering — borrowed from aviation and ergonomics — gave birth to the first usability principles: clarity, efficiency, and feedback. The question was no longer “How can humans adapt to computers?” but “How can computers serve human goals?”

2. The Graphical Revolution: From Codes to Icons

2.1 The Birth of the GUI

The Graphical User Interface (GUI), pioneered at Xerox PARC in the 1970s and popularized in the 1980s by the Apple Macintosh and Microsoft Windows, marked a turning point. The GUI translated abstract code into visual metaphors (folders, desktops, windows, and icons), making interaction more intuitive and human-like.

This shift was revolutionary because it humanized computing. Instead of memorizing commands, users could now “see” and “touch” digital environments. The mouse, once a laboratory curiosity, became an everyday tool of communication between human intention and machine execution.

2.2 Cognitive Models and User Experience

As personal computing spread, researchers began exploring how users think while interacting with machines. The Model Human Processor (MHP) by Card, Moran, and Newell (1983) modeled humans as information processors — with perceptual, cognitive, and motor subsystems — to predict how users interact with interfaces.
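To make the predictive idea concrete, here is a minimal sketch of an MHP-style estimate. It assumes the nominal cycle times commonly quoted for the model (roughly 100 ms perceptual, 70 ms cognitive, 70 ms motor); the helper name `estimate_response_ms` and the cycle counts chosen for each task are illustrative assumptions, not part of the original model description.

```python
# Nominal processor cycle times in milliseconds, as commonly quoted for the
# Model Human Processor. Real values vary widely between people and tasks.
PERCEPTUAL_MS = 100
COGNITIVE_MS = 70
MOTOR_MS = 70


def estimate_response_ms(perceptual=1, cognitive=1, motor=1):
    """Estimate task time as a sum of processor cycles (hypothetical helper)."""
    return perceptual * PERCEPTUAL_MS + cognitive * COGNITIVE_MS + motor * MOTOR_MS


# Simple reaction: perceive a signal, decide, press a key (one cycle each).
simple = estimate_response_ms()

# Choice reaction: one extra cognitive cycle to pick which key to press.
# The extra cycle count is an assumption made for illustration.
choice = estimate_response_ms(cognitive=2)

print(simple, choice)
```

Under these nominal values the simple reaction comes to 240 ms and the choice reaction to 310 ms. The point is less the exact numbers than the method: an interface can be compared on paper by counting the perceptual, cognitive, and motor steps it demands.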

This cognitive approach led to the emergence of User Experience (UX) design — the practice of optimizing satisfaction, efficiency, and emotional resonance. HCI was no longer just about using computers but about experiencing them. Designers began to treat users as emotional, social beings rather than rational operators.

3. The Intelligent Interface: When Computers Learn to Predict

3.1 The Shift Toward Adaptive Systems

From the early 2000s onward, advances in machine learning and large-scale data enabled computers to anticipate user needs. Interfaces became adaptive, adjusting layouts, recommendations, and responses based on behavior.

Search engines predicted queries; smartphones auto-corrected words; virtual assistants like Siri, Alexa, and Google Assistant engaged in dialogue. The machine was no longer passive — it observed, learned, and responded.

This marked the rise of context-aware computing, where interaction depended not just on input but on situation — location, time, emotion, and history. The line between user and interface began to blur as systems started “understanding” users implicitly.
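As a rough illustration of how the same input can yield different responses depending on situation, the sketch below ranks query completions using two context signals, location and history. Everything here is hypothetical: the `Context` class, the `rank_completions` function, the scoring weights, and the sample data are assumptions made for this example, not a description of any real system.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical snapshot of the user's situation."""
    location: str
    recent_queries: list = field(default_factory=list)


def rank_completions(prefix, candidates, ctx):
    """Rank completions of `prefix`, favouring history and current location.

    `candidates` maps each completion to the set of locations where it is
    typically relevant. The weights (2 for history, 1 for location) are
    arbitrary illustrative choices.
    """
    matches = [c for c in candidates if c.startswith(prefix)]

    def score(completion):
        points = 0
        if completion in ctx.recent_queries:
            points += 2
        if ctx.location in candidates[completion]:
            points += 1
        return points

    # Highest score first; ties are broken alphabetically.
    return sorted(matches, key=lambda c: (-score(c), c))


candidates = {
    "coffee near me": {"office", "street"},
    "coffee maker manual": {"home"},
}

at_home = rank_completions("cof", candidates, Context(location="home"))
at_office = rank_completions("cof", candidates, Context(location="office"))
print(at_home[0])
print(at_office[0])
```

The typed prefix is identical in both calls, yet the top suggestion differs: the manual ranks first at home and the nearby-coffee search ranks first at the office.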

3.2 Natural Interaction: Voice, Gesture, and Beyond

The next leap came with natural user interfaces (NUIs) — systems designed to align with human intuition. Voice recognition, gesture control, and touchscreens allowed users to interact through instinctive behavior rather than artificial commands.

For example, swiping to unlock a smartphone mimics physical motion; speaking to an AI mirrors conversation; gesturing in VR engages the body directly. These forms of interaction draw on ideas from cognitive linguistics and embodied cognition, suggesting that the most natural interfaces are those that mirror how humans already perceive and act in the world.

4. Emotion-Aware Interfaces: Technology Learns to Feel

4.1 The Rise of Affective Computing

In the 2010s, emotion recognition moved into the mainstream of HCI. Affective computing, a term coined by Rosalind Picard in the 1990s, aims to endow machines with the ability to detect, interpret, and respond to human emotions. Using sensors, facial recognition, voice tone analysis, and physiological signals, systems can now attempt to infer emotional states, from joy to frustration, and adjust responses accordingly.

Applications range from mental health monitoring and customer service to educational AI that adapts to students’ moods. For instance, a tutoring system might slow its pace if it detects confusion or stress.
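The tutoring example can be sketched as a simple rule. This is a minimal illustration under stated assumptions: the `confusion_score` is taken to be a number between 0 and 1 supplied by some upstream emotion-detection component, and the function name, threshold, slow-down factor, and minimum pace are all hypothetical values chosen for the example.

```python
def adjust_pace(current_pace, confusion_score,
                threshold=0.6, slow_factor=0.75, min_pace=0.5):
    """Return a new lesson pace given an estimated confusion level.

    current_pace:    relative speed, where 1.0 is the normal pace.
    confusion_score: assumed estimate in [0, 1] from an upstream detector.
    The default threshold, factor, and floor are illustrative only.
    """
    if not 0.0 <= confusion_score <= 1.0:
        raise ValueError("confusion_score must be between 0 and 1")
    if confusion_score >= threshold:
        # Slow down, but never below the minimum pace.
        return max(min_pace, current_pace * slow_factor)
    # No sign of confusion: keep the current pace.
    return current_pace


print(adjust_pace(1.0, 0.8))  # learner seems confused
print(adjust_pace(1.0, 0.2))  # learner seems fine
```

A real system would need far more care than this, since the detector's estimate may be wrong and a learner may not want the pace changed on their behalf; the rule only shows where an emotional signal could enter the interaction loop.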

4.2 Empathy in Design

The challenge is not just detecting emotion but responding ethically and empathetically. Designers now focus on empathic interfaces — systems that mirror human emotional intelligence.

Empathy in HCI involves understanding users’ unspoken needs and values. It requires ethical sensitivity, ensuring that emotional data is used to empower rather than manipulate. For example, emotion-aware advertising risks exploitation, while therapeutic robots like PARO or ElliQ demonstrate compassionate application.

In this stage, interaction becomes relational rather than functional. The computer is not just a tool — it becomes a partner in emotional and cognitive co-existence.

5. The Expanding Definition of Interaction

5.1 From Interface to Relationship

The more technology integrates into daily life, the less visible it becomes. Smart homes, wearables, and the Internet of Things (IoT) dissolve the boundary between human and machine. The “interface” no longer sits on a screen but surrounds us — embedded in environments, clothing, and even bodies.

Interaction now encompasses ambient intelligence and ubiquitous computing, where systems sense presence, adapt behavior, and coordinate invisibly. We are no longer simply using technology; we are living with it.

5.2 From Usability to Humanity

Modern HCI emphasizes human-centered design, an approach that prioritizes meaning, inclusivity, and well-being. The focus has shifted from efficiency to human flourishing — designing systems that support autonomy, creativity, and empathy.

This approach asks profound questions:

- How do we preserve agency when machines predict our behavior?

- How do we maintain authenticity when technology mediates emotion?

- Can interfaces respect human vulnerability rather than exploit it?

HCI thus becomes a moral discipline as much as a technical one — a reflection of what kind of relationship humanity seeks with its creations.

6. Case Studies: Transformative Shifts in HCI

6.1 From Desktop to Mobile: The Touch Revolution

The shift from desktop computing to mobile touchscreens revolutionized interaction by restoring direct manipulation. Swiping, pinching, and tapping reintroduced physical intuition, creating an embodied sense of control absent from keyboards.

Apple’s iPhone, released in 2007, epitomized this change — integrating hardware, software, and gesture into a single seamless experience. Touch interfaces blurred the separation between user and device, making digital communication feel natural.

6.2 Conversational AI: Redefining Interaction through Dialogue

Conversational agents like ChatGPT, Alexa, or Cortana mark another leap: interaction as language exchange. Instead of operating menus, users can now express intentions conversationally — “What’s the weather?” or “Summarize this text.”

Language-based HCI enables semantic understanding, allowing AI to adapt to tone, intent, and context. Yet, it also raises questions about dependence, emotional attachment, and truth — as users increasingly perceive machines as social actors.

7. The Cognitive and Emotional Implications

As interfaces grow more intelligent and empathetic, they begin to shape cognition itself. Research in HCI and psychology suggests that interface design affects how people think, remember, and make decisions. Autocomplete can shape language; recommendation systems can influence taste; emotional AI can reinforce or relieve stress.

HCI thus operates as cognitive architecture — structuring how humans engage with knowledge, attention, and emotion. The next frontier of interaction design will not only respond to human psychology but actively co-create it.

8. The Future of HCI: Toward Invisible and Intuitive Systems

8.1 Beyond Screens

Future interfaces may disappear altogether. With augmented reality (AR), brain–computer interfaces (BCI), and wearable computing, interaction may occur through thought, gaze, or even neural impulses. The goal is ultimate transparency — technology that disappears into experience.

Companies like Neuralink and Meta are exploring neural interfaces that aim to reduce or bypass conventional input devices. Such developments blur the line between user and system, raising both exciting and existential questions about autonomy and identity.

8.2 The Moral Frontier of Interaction

As HCI becomes intimate, ethics becomes inseparable from design. Privacy, consent, and mental health must be built into every layer of interaction. Emotion-aware and predictive systems hold immense power — and therefore immense responsibility.

The future of HCI must be grounded not only in innovation but in human values. Technology must adapt to people — not people to technology.

Conclusion: The Dialogue Continues

From command lines to emotion-aware systems, the evolution of Human–Computer Interaction reflects humanity’s ongoing desire to make machines more like ourselves. Yet the deeper lesson is not that computers are learning to be human — it is that humans are learning to understand themselves through technology.

Every interface is a mirror, reflecting our cognitive habits, emotional depth, and ethical dilemmas. As we move toward a world where machines can predict, feel, and respond, the challenge will not be technological but philosophical: How do we remain human in the presence of machines that understand us better than we understand ourselves?

HCI, at its best, is not about control or automation but about communication, empathy, and shared intelligence. The future of interaction will not end with smarter systems, but with wiser relationships — where technology amplifies, rather than replaces, the essence of being human.